API

If you're looking for an API, choose the example in your preferred programming language.

import requests
import base64

# Use this function to convert an image file from the filesystem to base64
def image_file_to_base64(image_path):
    with open(image_path, 'rb') as f:
        image_data = f.read()
    return base64.b64encode(image_data).decode('utf-8')

# Use this function to fetch an image from a URL and convert it to base64
def image_url_to_base64(image_url):
    response = requests.get(image_url)
    image_data = response.content
    return base64.b64encode(image_data).decode('utf-8')

# Use this function to convert a list of image URLs to base64
def image_urls_to_base64(image_urls):
    return [image_url_to_base64(url) for url in image_urls]

api_key = "YOUR_API_KEY"
url = "https://api.segmind.com/v1/bg-replace"

# Request payload
data = {
    # Or use image_file_to_base64("IMAGE_PATH") for either image
    "image": image_url_to_base64("https://segmind-sd-models.s3.amazonaws.com/outputs/bg_replace_input.jpg"),
    "ref_image": image_url_to_base64("https://segmind-sd-models.s3.amazonaws.com/outputs/bg_input_reference.jpg"),
    "prompt": "Perfume bottle placed on top of a rock, in a jungle",
    "negative_prompt": "bad quality, painting, blur",
    "samples": 1,
    "scheduler": "DDIM",
    "num_inference_steps": 25,
    "guidance_scale": 7.5,
    "seed": 12467,
    "strength": 1,
    "cn_weight": 0.9,
    "ip_adapter_weight": 0.5,
    "base64": False
}

headers = {'x-api-key': api_key}

response = requests.post(url, json=data, headers=headers)
print(response.content)  # The response is the generated image

Attributes

- image: Input image.

- ref_image: Reference image.

- prompt: Prompt to render.

- negative_prompt: Prompts to exclude, e.g. 'bad anatomy, bad hands, missing fingers'.

- samples: Number of samples to generate. min: 1, max: 4

- scheduler: Type of scheduler. Allowed values:

- num_inference_steps: Number of denoising steps. min: 20, max: 100

- guidance_scale: Scale for classifier-free guidance. min: 1, max: 25

- seed: Seed for image generation. min: -1, max: 999999999999999

- strength: Inpainting strength. min: 0, max: 1

- cn_weight: ControlNet weight, the degree of influence of the ControlNet conditioning. min: 0, max: 1

- ip_adapter_weight: IP Adapter weight, the degree of influence of the reference image. min: 0, max: 1

- base64: Base64 encoding of the output image.
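The numeric limits listed above can be checked on the client before a request is sent. The following is a minimal sketch, not part of the API: `PARAM_RANGES` and `validate_payload` are hypothetical names, the mapping of each range to a payload key is inferred from the order of the example request, and the bounds are assumed to be inclusive.

```python
# Ranges copied from the attribute list; key names are assumed to match
# the payload keys in the example request, and bounds are assumed inclusive.
PARAM_RANGES = {
    "samples": (1, 4),
    "num_inference_steps": (20, 100),
    "guidance_scale": (1, 25),
    "seed": (-1, 999999999999999),
    "strength": (0, 1),
    "cn_weight": (0, 1),
    "ip_adapter_weight": (0, 1),
}


def validate_payload(payload):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    for name, (low, high) in PARAM_RANGES.items():
        if name not in payload:
            continue  # missing keys are left for the API to default or reject
        value = payload[name]
        if not low <= value <= high:
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    return problems
```

Calling `validate_payload(data)` before `requests.post` surfaces out-of-range values locally instead of spending a request on them.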

To keep track of your credit usage, you can inspect the response headers of each API call. The x-remaining-credits property will indicate the number of remaining credits in your account. Ensure you monitor this value to avoid any disruptions in your API usage.
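As a rough illustration of the header check described above, the sketch below reads `x-remaining-credits` from a headers mapping such as `response.headers`. The helper name `remaining_credits` is hypothetical, and the assumption that the header value parses as a number is not confirmed by this page.

```python
def remaining_credits(headers):
    """Read the x-remaining-credits header; return None if absent or unparsable."""
    raw = headers.get("x-remaining-credits")
    if raw is None:
        return None
    try:
        # Assumption: the header carries a numeric value.
        return float(raw)
    except (TypeError, ValueError):
        return None
```

With the `requests` library this would be called as `remaining_credits(response.headers)`; the `requests` headers mapping is case-insensitive, whereas a plain dict is not.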

Background Replace

Background Replace (BG replace) pairs SDXL inpainting with both ControlNet and IP Adapter conditioning to generate photo-realistic images. This model offers more flexibility by allowing an image prompt to be used along with a text prompt to guide the image generation process. This means you can use an image prompt as a reference and a text prompt to generate the desired backgrounds, which look very close to real life.

This model is an improvement over the ControlNet Inpainting model.

Key Components of Background Replace

ControlNet helps in preserving the composition of the subject in the image by clearly separating it from the background. This is crucial when you want to maintain the structure of the subject while changing the surrounding area.

IP Adapter lets you use an existing image as a reference, together with a text prompt that specifies the desired background. For example, if you have a picture of a perfume bottle and want to change the background to a forest, you can supply a reference image (or IP image) of a forest and a text prompt like “Perfume bottle placed on top of a rock, in a jungle”. The IP Adapter then guides the inpainting process to replace the background of the image in a way that matches the reference image. So, in our example, the output image will have the perfume bottle on a rock in a forest setting.

How to use Background Replace

- Input image: Input an image of an object or subject, preferably with a white background.

- Reference Image: For the reference image, input any image you want as the new background for the object/subject in the input image.

- Advanced Settings: Start with the default settings (recommended) and iteratively tweak them based on your preference for the generated outputs. Check the recommended settings to have better control over the image outputs.
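For API users, the three steps above roughly correspond to building a request payload from two images, a prompt, and a set of adjustable settings. The sketch below is one way to organize that; `DEFAULT_SETTINGS` and `build_payload` are hypothetical names, and the values from the example request are used as stand-ins for the defaults, which is an assumption.

```python
import base64

# Assumption: the example request's values stand in for the default settings.
DEFAULT_SETTINGS = {
    "negative_prompt": "bad quality, painting, blur",
    "samples": 1,
    "scheduler": "DDIM",
    "num_inference_steps": 25,
    "guidance_scale": 7.5,
    "seed": 12467,
    "strength": 1,
    "cn_weight": 0.9,
    "ip_adapter_weight": 0.5,
    "base64": False,
}


def build_payload(image_bytes, ref_image_bytes, prompt, **overrides):
    """Combine the two images, the prompt, and any setting overrides."""
    unknown = set(overrides) - set(DEFAULT_SETTINGS)
    if unknown:
        raise ValueError(f"unknown settings: {sorted(unknown)}")
    payload = {
        "image": base64.b64encode(image_bytes).decode("utf-8"),
        "ref_image": base64.b64encode(ref_image_bytes).decode("utf-8"),
        "prompt": prompt,
    }
    payload.update(DEFAULT_SETTINGS)  # start from the stand-in defaults
    payload.update(overrides)         # then apply the caller's tweaks
    return payload
```

Tweaking one setting at a time, for example `build_payload(img, ref, prompt, guidance_scale=9)`, keeps it clear which change produced which difference in the output.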

Recommended Settings

In addition to parameters like Guidance scale, scheduler, and Strength, we recommend keeping a close eye on these two parameters, as they greatly influence the final image output.

CN Weight: This is the ControlNet weight. This setting determines the degree of influence the ControlNet conditioning (Canny Edge Detection) has on the original image. A higher weight means a greater influence. We recommend keeping the CN weight high to preserve the composition of the object/subject in the input image.

IP Adapter Weight: This is the IP image weight. This setting determines the degree of influence the image prompt (reference image) has on the original image. A higher weight means a greater influence and vice versa. The recommended value is 0.5, as it is neither too high nor too low. A high value can be counterproductive, as colors from the image prompt will start creeping into the subject/object in the input image.
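One way to explore these two settings is to hold the CN weight high and try a few IP Adapter weights around the recommended 0.5. The sketch below only prepares the payload variants and sends nothing. `weight_sweep` is a hypothetical helper, and the specific weights 0.3 and 0.7 are illustrative choices, not recommendations from this page.

```python
def weight_sweep(base_payload, ip_weights=(0.3, 0.5, 0.7), cn_weight=0.9):
    """Return one payload copy per IP Adapter weight, with CN weight held high."""
    variants = []
    for w in ip_weights:
        if not 0 <= w <= 1:
            raise ValueError(f"ip_adapter_weight {w} is outside [0, 1]")
        variant = dict(base_payload)  # shallow copy; the base stays untouched
        variant["cn_weight"] = cn_weight
        variant["ip_adapter_weight"] = w
        variants.append(variant)
    return variants
```

Each returned payload can be posted in turn. Keeping the same `seed` across variants should make the outputs easier to compare, assuming the seed behaves deterministically.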

Background Replace in Pixelflow Workflows

Background replace is used in many background generation and replacement workflows in Pixelflow. It is ideal for product photography use cases in the e-commerce industry. Some of the workflows that utilize BG replace include:

- Furniture Background Generator: Generates new background settings (indoor and outdoor) for a furniture piece.

- Shoe Background Generator: Generates new outdoor backgrounds for running shoes.

- Jewelry Background Generator: Generates new backgrounds for jewelry.

Other Popular Models

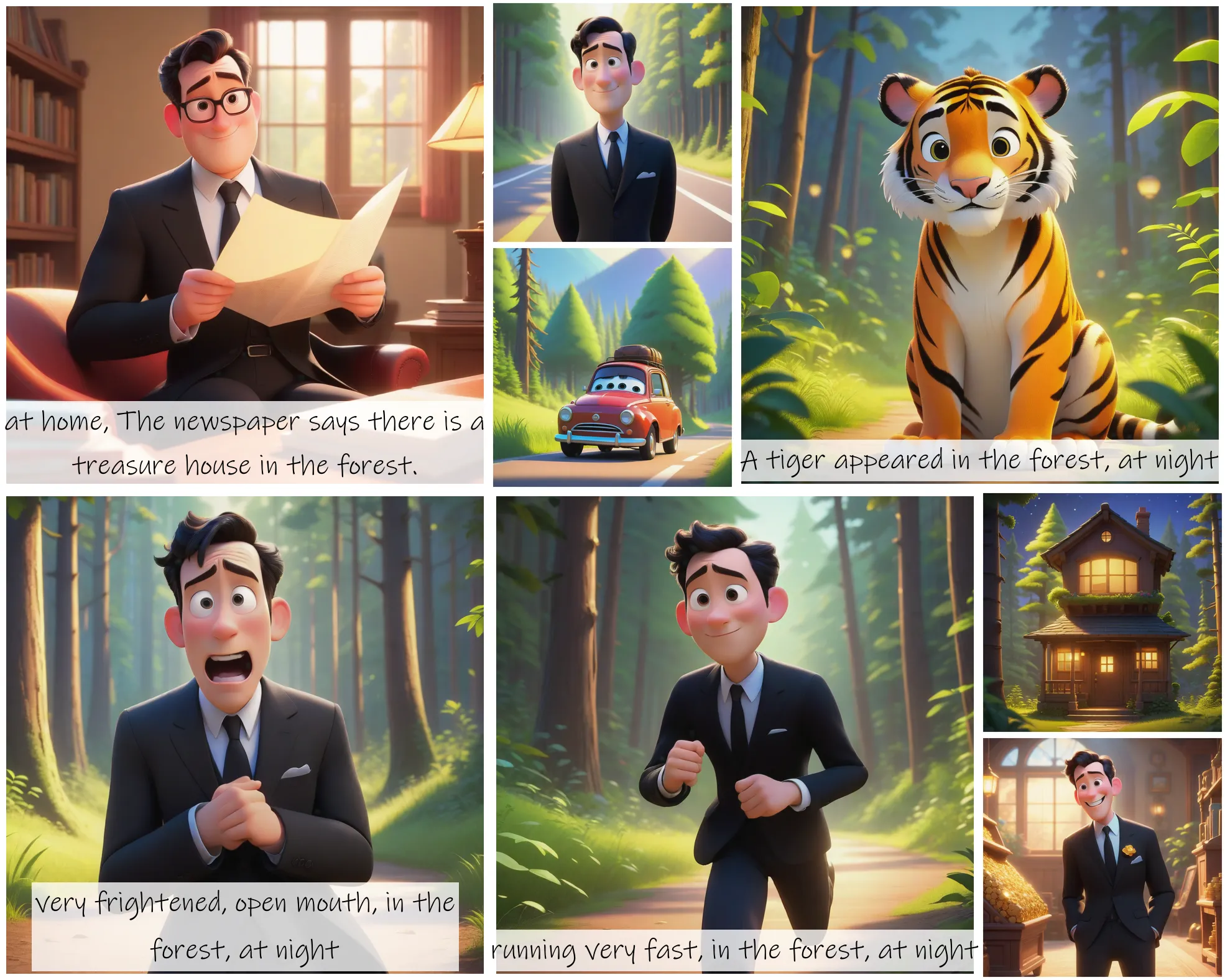

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

sadtalker

Audio-based Lip Synchronization for Talking Head Video

fooocus

Fooocus enables high-quality image generation effortlessly, combining the best of Stable Diffusion and Midjourney.

sdxl-inpaint

This model is capable of generating photo-realistic images given any text input, with the extra capability of inpainting the pictures by using a mask