PixelFlow allows you to use all these features

Unlock the full potential of generative AI with Segmind. Create stunning visuals and innovative designs with total creative control. Take advantage of powerful development tools to automate processes and models, elevating your creative workflow.

Segmented Creation Workflow

Gain greater control by dividing the creative process into distinct steps, refining each phase.

Customized Output

Customize at various stages, from initial generation to final adjustments, ensuring tailored creative outputs.

Layering Different Models

Integrate and utilize multiple models simultaneously, producing complex and polished creative results.

Workflow APIs

Deploy PixelFlows as APIs quickly, without any server setup, ensuring scalability and efficiency.

Kling AI Image-to-Video Generation

Kling AI, developed by the Kuaishou AI Team, is a sophisticated AI model designed to transform static images into dynamic, high-quality videos. This model leverages advanced AI technologies to offer unparalleled video generation capabilities, making it an essential tool for content creators, marketers, and educators.

Key Features of Kling AI Image-to-Video

- Dynamic-Resolution Training: The model’s dynamic-resolution training strategy lets it create visually appealing content in various aspect ratios. This flexibility means Kling AI can adapt to different video formats, making it suitable for a wide range of applications.
- 3D Space-Time Attention and Diffusion Transformer: Kling AI uses 3D space-time attention and diffusion transformer technologies to model movement accurately and create imaginative scenes efficiently.
- Video Length: Kling AI can generate videos 5 or 10 seconds long. This capability is particularly beneficial for creating visual narratives and detailed educational content.

How to use Kling AI Image-to-Video

- Uploading an Image: Start by uploading an image that will serve as the initial frame of your video.
- Drafting the Prompt: Provide a detailed text prompt that describes the desired video. Include specifics such as scene settings, character actions, and camera movements. For example, “A serene beach at sunset with waves gently crashing and seagulls flying overhead.”
- Generating the Video: Enter your prompt into the designated text field and start the video generation process. Kling AI will process the input and create a video based on your description.
- Customizing Output Settings: Adjust the output settings to match your project requirements. You can select the resolution, aspect ratio, and video length to ensure the final product meets your needs.
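If you drive the model from code instead of the web form, the same steps amount to assembling one request: the first-frame image, the prompt, and the output settings. The sketch below is a minimal illustration under stated assumptions. The field names `image`, `prompt`, `duration`, and `aspect_ratio`, the base64 encoding of the image, and the function name are hypothetical placeholders, not a documented Segmind or Kling schema. Only the 5 and 10 second lengths come from this page. Check the official API reference for the real parameter names before sending anything.

```python
import base64

# Video lengths this page says Kling AI supports, in seconds.
SUPPORTED_DURATIONS = {5, 10}


def build_image_to_video_request(image_bytes: bytes, prompt: str,
                                 duration: int = 5,
                                 aspect_ratio: str = "16:9") -> dict:
    """Assemble a request body for an image-to-video call.

    HYPOTHETICAL: the keys below are illustrative placeholders,
    not a documented Segmind or Kling API schema.
    """
    if not image_bytes:
        raise ValueError("an input image is required as the first frame")
    if not prompt.strip():
        raise ValueError("a text prompt describing the video is required")
    if duration not in SUPPORTED_DURATIONS:
        raise ValueError(f"duration must be one of {sorted(SUPPORTED_DURATIONS)} seconds")

    # Expect an aspect ratio written as "W:H" with two positive integers.
    parts = aspect_ratio.split(":")
    if len(parts) != 2 or not all(p.isdigit() and int(p) > 0 for p in parts):
        raise ValueError("aspect_ratio must look like '16:9'")

    return {
        "image": base64.b64encode(image_bytes).decode("ascii"),  # step 1: first frame
        "prompt": prompt.strip(),                               # step 2: scene description
        "duration": duration,                                   # step 4: video length
        "aspect_ratio": aspect_ratio,                           # step 4: output shape
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file's contents.
    request = build_image_to_video_request(
        b"placeholder image bytes",
        "A serene beach at sunset with waves gently crashing and seagulls flying overhead.",
        duration=10,
    )
    print(sorted(request))
```

Validating the length and aspect ratio locally catches a bad setting before you spend a generation on it; the actual HTTP call is left out because its endpoint and authentication are not described here.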

Best Practices for Optimal Results

- Detailed Descriptions: The more specific and descriptive your text prompt, the better the AI can interpret and visualize your ideas. Include details about lighting, colors, and movements to enhance the realism of the generated video.
- Iterative Refinement: Experiment with different prompts and settings to refine the output. Iterative adjustments allow you to achieve the best possible results by fine-tuning the input parameters.
- High-Quality Image Inputs: Use high-resolution images to ensure that the initial frame of your video is clear and detailed. This will enhance the overall quality of the generated video.
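One way to apply the first two practices together is to keep the prompt's ingredients (scene, action, camera, lighting, colors) as separate fields, then generate a small grid of variants to compare. The sketch below is an illustrative helper of our own, assuming a prompt written as short sentences works well. It is not a format Kling requires, and the function names are hypothetical.

```python
from itertools import product


def compose_prompt(scene: str, action: str = "", camera: str = "",
                   lighting: str = "", colors: str = "") -> str:
    """Join a scene description and optional details into one prompt string."""
    parts = [scene, action, camera, lighting, colors]
    # Drop empty fields, trim whitespace and trailing periods, then join as sentences.
    cleaned = [p.strip().rstrip(".") for p in parts if p and p.strip()]
    return ". ".join(cleaned) + "." if cleaned else ""


def prompt_variants(scene: str, actions: list[str], cameras: list[str],
                    lightings: list[str]) -> list[str]:
    """Build every combination of the given details for side-by-side comparison."""
    return [compose_prompt(scene, a, c, l)
            for a, c, l in product(actions, cameras, lightings)]


if __name__ == "__main__":
    for p in prompt_variants(
        "A serene beach at sunset",
        ["Waves gently crash and seagulls fly overhead"],
        ["Static wide shot", "Slow pan from left to right"],
        ["Warm golden light", "Soft overcast light"],
    ):
        print(p)
```

Changing one field at a time keeps each refinement round easy to compare, since any difference between two videos traces back to a single detail in the prompt.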

For more tips on using this model, read Kling's AI Video Guide: https://docs.qingque.cn/d/home/eZQDvlYrDMyE9lOforCeWA4KP

Other Popular Models

sdxl-controlnet

SDXL ControlNet gives unprecedented control over text-to-image generation. SDXL ControlNet models introduce conditioning inputs, which provide additional information to guide the image generation process.

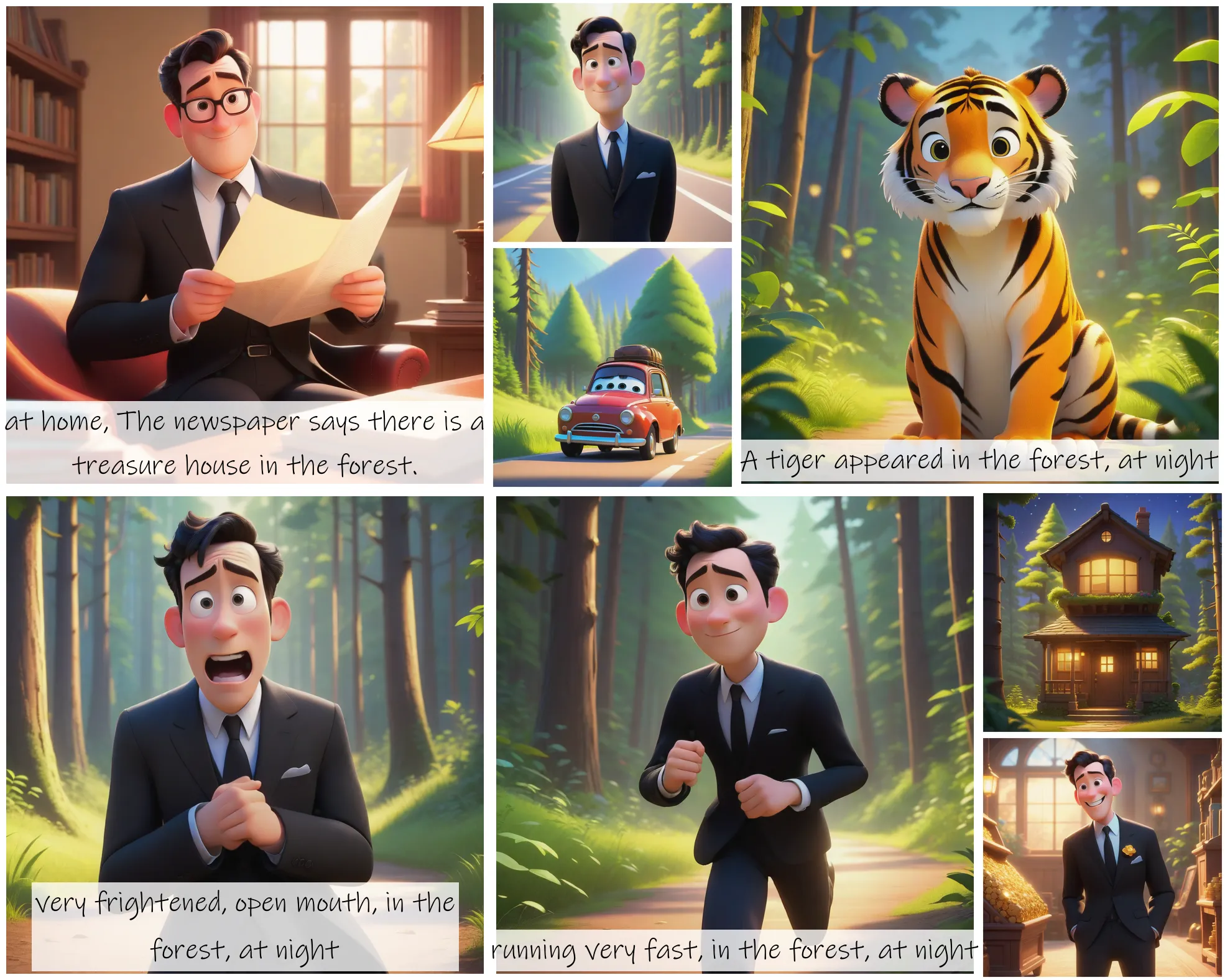

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

fooocus

Fooocus enables high-quality image generation effortlessly, combining the best of Stable Diffusion and Midjourney.

sd2.1-faceswapper

Take a picture or GIF and replace the face in it with a face of your choice. You need only one image of the desired face: no dataset, no training.