PixelFlow allows you to use all these features

Unlock the full potential of generative AI with Segmind. Create stunning visuals and innovative designs with total creative control, and use powerful developer tools to automate processes and chain models together, streamlining your creative workflow.

Segmented Creation Workflow

Gain greater control by dividing the creative process into distinct steps and refining each phase on its own.

Customized Output

Customize outputs at every stage, from initial generation to final adjustments, so the results match your creative goals.

Layering Different Models

Combine multiple models within a single workflow to produce complex, polished creative results.

Workflow APIs

Deploy PixelFlows as APIs quickly, with no server setup, while keeping them scalable and efficient.

Llama 4 Scout Instruct Basic

Llama 4 Scout Instruct Basic brings native multimodality to the Llama ecosystem: a single model understands both text and images. It is a mixture-of-experts (MoE) model with 17 billion active parameters, 16 experts, and about 109 billion parameters in total, designed for developers and researchers who need strong performance on tasks that combine text and visual data.
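To make the text-plus-image input concrete, here is a minimal Python sketch that assembles (but does not send) one multimodal request. Everything about the transport is an assumption for illustration: the endpoint URL and model slug, the `x-api-key` header, the OpenAI-style message layout with `text` and `image_url` content parts, and the `max_tokens` field are placeholders, not a documented Segmind schema; check the model's API page before relying on any of them.

```python
import base64
import json

# ASSUMPTION: hypothetical endpoint and slug; check the model's API page for the real URL.
API_URL = "https://api.segmind.com/v1/llama-4-scout-instruct-basic"

def image_to_data_url(data: bytes, mime: str = "image/png") -> str:
    """Embed raw image bytes as a base64 data URL."""
    return f"data:{mime};base64," + base64.b64encode(data).decode("ascii")

def build_multimodal_request(question: str, image_bytes: bytes, api_key: str):
    """Return (url, headers, json_body) for one text+image chat request.

    ASSUMPTION: the OpenAI-style layout (a user message whose content is a list
    of "text" and "image_url" parts) and the "max_tokens" field are illustrative,
    not a documented Segmind schema.
    """
    body = {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {"type": "image_url", "image_url": {"url": image_to_data_url(image_bytes)}},
                ],
            }
        ],
        "max_tokens": 512,
    }
    # ASSUMPTION: the API key is passed in an "x-api-key" header.
    headers = {"x-api-key": api_key, "Content-Type": "application/json"}
    return API_URL, headers, json.dumps(body)
```

To send it, pass the three values to any HTTP client. With the standard library, `urllib.request.urlopen(urllib.request.Request(url, data=payload.encode("utf-8"), headers=headers))` issues a POST, because supplying `data` makes the request a POST.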

- Natively Multimodal AI - This model inherently understands and processes both text and image data, enabling richer and more versatile applications beyond traditional text-based models. This capability opens doors for tasks involving visual content analysis, image captioning, and multimodal reasoning.

- Mixture-of-Experts Architecture - Its MoE layers contain 16 experts, and a learned router activates only a subset of them for each token instead of running the whole network. This keeps per-token compute close to that of a much smaller dense model while still drawing on a large total parameter count, balancing accuracy against computational cost.

- Efficient 17-Billion-Active-Parameter Model - Because only 17 billion parameters are active for each token, Llama 4 Scout is practical for a wider range of deployment scenarios and costs less to run than dense models with a comparable total parameter count.

- Industry-Leading Performance in Text and Image Understanding - Meta reports that Llama 4 Scout outperforms comparable models such as Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1 across a broad range of widely reported benchmarks, making it a strong choice for applications that require deep understanding of complex text and visual data.
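The routing idea behind the mixture-of-experts features above can be sketched in plain Python. This is a toy illustration of generic top-k expert routing, not Llama 4's actual implementation: the expert functions, router weights, vector size, and the choice of a softmax over only the selected logits (one common gating variant) are all assumptions.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(router_logits, k):
    """Return [(expert_index, gate_weight), ...] for the k highest-scoring experts.

    Gate weights are a softmax over only the selected logits, so they sum to 1.
    """
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    gates = softmax([router_logits[i] for i in top])
    return list(zip(top, gates))

def moe_layer(x, experts, router_weights, k=1):
    """Toy MoE layer for one token vector x.

    router_weights holds one weight vector per expert; an expert's router score
    is its dot product with x. Only the k routed experts are evaluated, which is
    where the compute saving comes from.
    """
    logits = [sum(w_j * x_j for w_j, x_j in zip(w, x)) for w in router_weights]
    out = [0.0] * len(x)
    for idx, gate in route_token(logits, k):
        y = experts[idx](x)
        out = [o + gate * y_j for o, y_j in zip(out, y)]
    return out

def make_expert(scale):
    """Hypothetical stand-in for an expert feed-forward network: scales its input."""
    return lambda v: [scale * t for t in v]

NUM_EXPERTS, DIM = 16, 4
random.seed(0)
experts = [make_expert(i + 1) for i in range(NUM_EXPERTS)]
router_weights = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
token = [0.5, -1.0, 0.25, 2.0]
print(moe_layer(token, experts, router_weights, k=1))
```

With `k=1`, each token touches only one of the 16 toy experts. The same idea explains the parameter counts: with about 109B total parameters and 17B active per token, Scout uses roughly 17/109 ≈ 16% of its weights for any given token. Production MoE layers add details this toy omits, such as load balancing and batched token dispatch.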

Use Cases

- Content Moderation Systems - Analyzing both text and images to identify harmful or inappropriate content, improving the accuracy and scope of moderation across various online platforms.

- Image Captioning and Prompt Writing - Automatically generating descriptive captions for images, or detailed text prompts that a separate image-generation model can turn into new images (Llama 4 Scout itself produces text, not images), benefiting areas like accessibility and creative content creation.

- Multimodal Information Retrieval - Enabling search applications to understand queries involving both text and images, allowing users to find information in a more intuitive and comprehensive way.

- Educational Tools - Developing interactive learning experiences that can understand and respond to both textual questions and visual inputs, creating more engaging and effective educational resources.

- E-commerce Product Understanding - Analyzing product images, descriptions, and customer reviews to extract product features and attributes, supporting recommendations and inventory management.

- Robotics and Autonomous Systems - Serving as a perception and reasoning component that interprets camera images together with text instructions, helping robots plan navigation and interaction tasks.
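As one concrete pattern for the content-moderation use case, the sketch below assembles a text-plus-image request that asks the model for a JSON verdict, then parses the reply defensively. The label set, prompt wording, and OpenAI-style message layout are hypothetical choices, not a Segmind or Meta API; sending the messages is left to whichever client you use.

```python
import json
import re

# HYPOTHETICAL label set: replace with categories from your own policy.
LABELS = {"safe", "harassment", "violence", "sexual", "self_harm", "spam"}

MODERATION_INSTRUCTIONS = (
    "You are a content moderator. Review the user's text and the attached image. "
    'Reply with JSON only: {"label": <one of ' + ", ".join(sorted(LABELS)) + '>, '
    '"reason": <short explanation>}.'
)

def build_moderation_messages(post_text: str, image_url: str):
    """Chat messages for a text+image moderation request (OpenAI-style layout assumed)."""
    return [
        {"role": "system", "content": MODERATION_INSTRUCTIONS},
        {"role": "user", "content": [
            {"type": "text", "text": post_text},
            {"type": "image_url", "image_url": {"url": image_url}},
        ]},
    ]

def parse_verdict(reply: str) -> dict:
    """Extract the JSON object from the model's reply and validate its label.

    Falls back to "needs_review" when the reply is malformed or uses an unknown
    label, so a bad generation never silently approves content.
    """
    match = re.search(r"\{.*\}", reply, re.DOTALL)
    if match:
        try:
            data = json.loads(match.group(0))
        except json.JSONDecodeError:
            data = None
        if isinstance(data, dict) and data.get("label") in LABELS:
            return {"label": data["label"], "reason": str(data.get("reason", ""))}
    return {"label": "needs_review", "reason": "unparseable model output"}
```

Routing unparseable output to human review rather than defaulting to "safe" is a deliberate design choice: generative models occasionally wrap JSON in extra prose or drift from the requested format.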

Other Popular Models

sdxl-img2img

SDXL Img2Img performs text-guided image-to-image translation: it generates a new image from an input image and a text prompt, using Stable Diffusion weights and the StableDiffusionImg2ImgPipeline from diffusers.

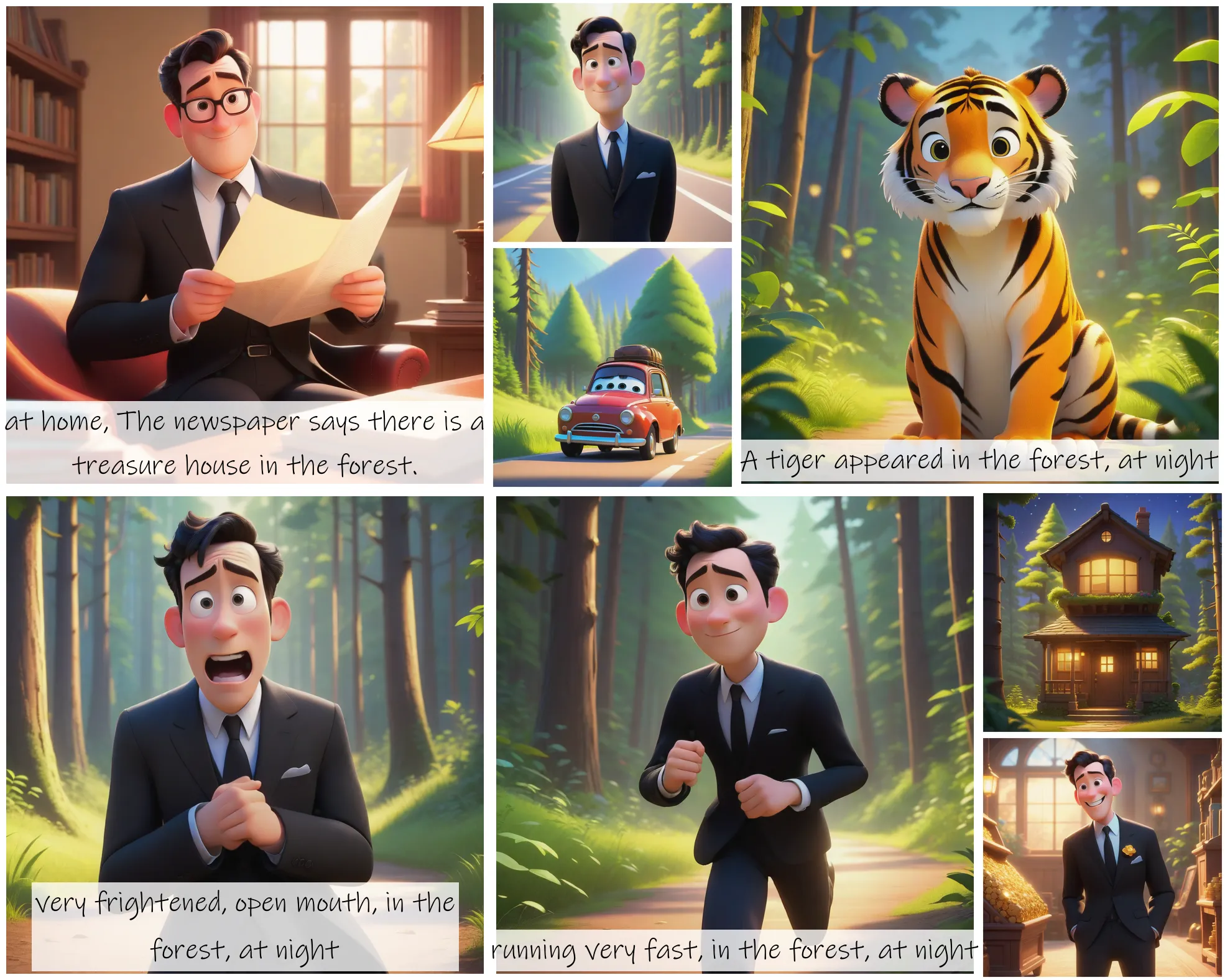
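Image-to-image generation starts from a partially noised version of the input image rather than from pure noise. In diffusers' img2img pipelines, the `strength` argument controls how far into the denoising schedule that starting point lies. The sketch below reproduces that arithmetic as we understand it, using a hypothetical, evenly spaced 50-step schedule; real schedulers compute their own timesteps.

```python
def img2img_timesteps(schedule, strength):
    """Return the portion of a denoising schedule that img2img actually runs.

    schedule: timesteps ordered from noisiest to cleanest (len = inference steps).
    strength: 0.0 returns no steps (input kept as-is); 1.0 runs the full schedule,
    so the input image has little influence. The input latents are noised to the
    first returned timestep, then denoised over the returned steps.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    n = len(schedule)
    init_steps = min(int(n * strength), n)  # how many steps to denoise
    t_start = max(n - init_steps, 0)        # how many early (noisiest) steps to skip
    return schedule[t_start:]

# HYPOTHETICAL schedule: 50 inference steps spread over 1000 training timesteps.
schedule = list(range(980, -1, -20))
print(len(img2img_timesteps(schedule, 0.8)), img2img_timesteps(schedule, 0.8)[0])
```

Lower `strength` values skip more of the noisiest steps, which preserves more of the input image's composition at the cost of less freedom for the prompt.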

storydiffusion

StoryDiffusion turns your written narratives into stunning image sequences with consistent characters.

fooocus

Fooocus makes high-quality image generation effortless, pairing Stable Diffusion XL models with the prompt-focused simplicity of Midjourney.

sdxl1.0-txt2img

SDXL is the official successor to the Stable Diffusion v1.5 model, and its weights are openly released.