Luma Video (Image to Video)

Luma’s Image-to-Video, powered by Dream Machine, converts a still image into a high-fidelity video clip. It is designed for professionals who want to streamline content creation without giving up precision. The model can produce cinema-grade video at 1080p resolution, suitable for professional use across industries.

Key Features of Luma Image to Video

High-Fidelity Video Generation:

- Realistic Visuals: Utilizing advanced neural networks, Dream Machine generates videos with high accuracy in motion dynamics, object interactions, and environmental consistency.

- Rapid Processing: Capable of producing 120 frames in 120 seconds, facilitating quick iterations and extensive experimentation.

- Image-to-Video: Upload static images to create dynamic video content, employing sophisticated image analysis and transformation algorithms.
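The Rapid Processing figure above (120 frames in 120 seconds) works out to roughly one generated frame per second. As a rough sketch of how that might be used to budget iteration time, the snippet below assumes generation time scales linearly with frame count and that clips play back at 24 frames per second; neither assumption is stated on this page, and the function names are illustrative only.

```python
def generation_seconds(frames: int,
                       ref_frames: int = 120,
                       ref_seconds: float = 120.0) -> float:
    """Estimate generation time for `frames` frames.

    Uses the quoted reference point (120 frames in 120 seconds) and
    ASSUMES time scales linearly with the number of frames.
    """
    if frames < 0:
        raise ValueError("frames must be non-negative")
    return frames * ref_seconds / ref_frames


def clip_seconds(frames: int, playback_fps: float = 24.0) -> float:
    """Length of the finished clip; 24 fps playback is an assumption."""
    if playback_fps <= 0:
        raise ValueError("playback_fps must be positive")
    return frames / playback_fps


if __name__ == "__main__":
    frames = 120
    print(f"{frames} frames: about {generation_seconds(frames):.0f} s to generate, "
          f"{clip_seconds(frames):.1f} s of video at 24 fps")
```

Under these assumptions, each iteration on a 120-frame clip costs about two minutes of generation for about five seconds of footage.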

Scalability and Efficiency:

- Transformer-Based Architecture: Built on a transformer model trained on extensive video datasets, ensuring scalability and computational efficiency.

- Universal Imagination Engine: Represents the initial phase of Luma’s broader initiative to develop a comprehensive imagination engine capable of diverse content generation tasks.

Creative and Technical Flexibility:

- Cinematic Quality: Incorporates advanced camera motion algorithms to produce fluid, cinematic video sequences that align with the narrative and emotional tone of the input.

- Versatile Camera Movements: Users can experiment with a range of fluid, naturalistic camera motions suited to the emotional tone and content of the scene, which helps creators tell their story more effectively. To request a motion, type the word "camera" followed by a direction in your text prompt. Camera motions to try: Move Left/Right, Move Up/Down, Push In/Out, Pan Left/Right, Orbit Left/Right, Crane Up/Down.

- Flexible Aspect Ratios: Dream Machine supports various video aspect ratios, so creators can tailor output to different platforms, from social media to widescreen presentations.
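The camera-motion convention described above (the word "camera" followed by a direction) can be applied programmatically when preparing prompts in bulk. The following is a minimal sketch: the helper name `with_camera_motion` is hypothetical, the motion list is copied from the text above, and the exact phrasing the model responds to best is an assumption. It only builds the prompt string and does not call any Luma API.

```python
# Camera motions listed on this page, grouped by motion type.
CAMERA_MOTIONS = {
    "move": ("left", "right", "up", "down"),
    "push": ("in", "out"),
    "pan": ("left", "right"),
    "orbit": ("left", "right"),
    "crane": ("up", "down"),
}


def with_camera_motion(prompt: str, motion: str, direction: str) -> str:
    """Append a 'camera <motion> <direction>' phrase to a text prompt.

    Hypothetical helper: it validates the pair against the list above
    and returns the combined prompt string.
    """
    motion, direction = motion.lower(), direction.lower()
    if direction not in CAMERA_MOTIONS.get(motion, ()):
        raise ValueError(f"unsupported camera motion: {motion} {direction}")
    # Drop trailing punctuation so the camera phrase reads as one sentence.
    return f"{prompt.rstrip(' .,')}, camera {motion} {direction}"


if __name__ == "__main__":
    print(with_camera_motion(
        "A lighthouse at dusk, waves breaking on the rocks.", "orbit", "left"))
    # prints: A lighthouse at dusk, waves breaking on the rocks, camera orbit left
```

Validating against a fixed list keeps typos such as "pan up" from silently reaching the model, where they would likely be ignored or misread.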

Use Cases

- Marketing Campaigns: Create engaging promotional videos that resonate with your target audience.

- Educational Content: Transform educational materials into visually appealing videos that enhance learning experiences.

- Social Media: Generate eye-catching content for platforms like Instagram, TikTok, and YouTube to increase engagement.

Other Popular Models

sdxl-img2img

SDXL Img2Img is used for text-guided image-to-image translation. This model uses the weights from Stable Diffusion to generate new images from an input image using StableDiffusionImg2ImgPipeline from diffusers.

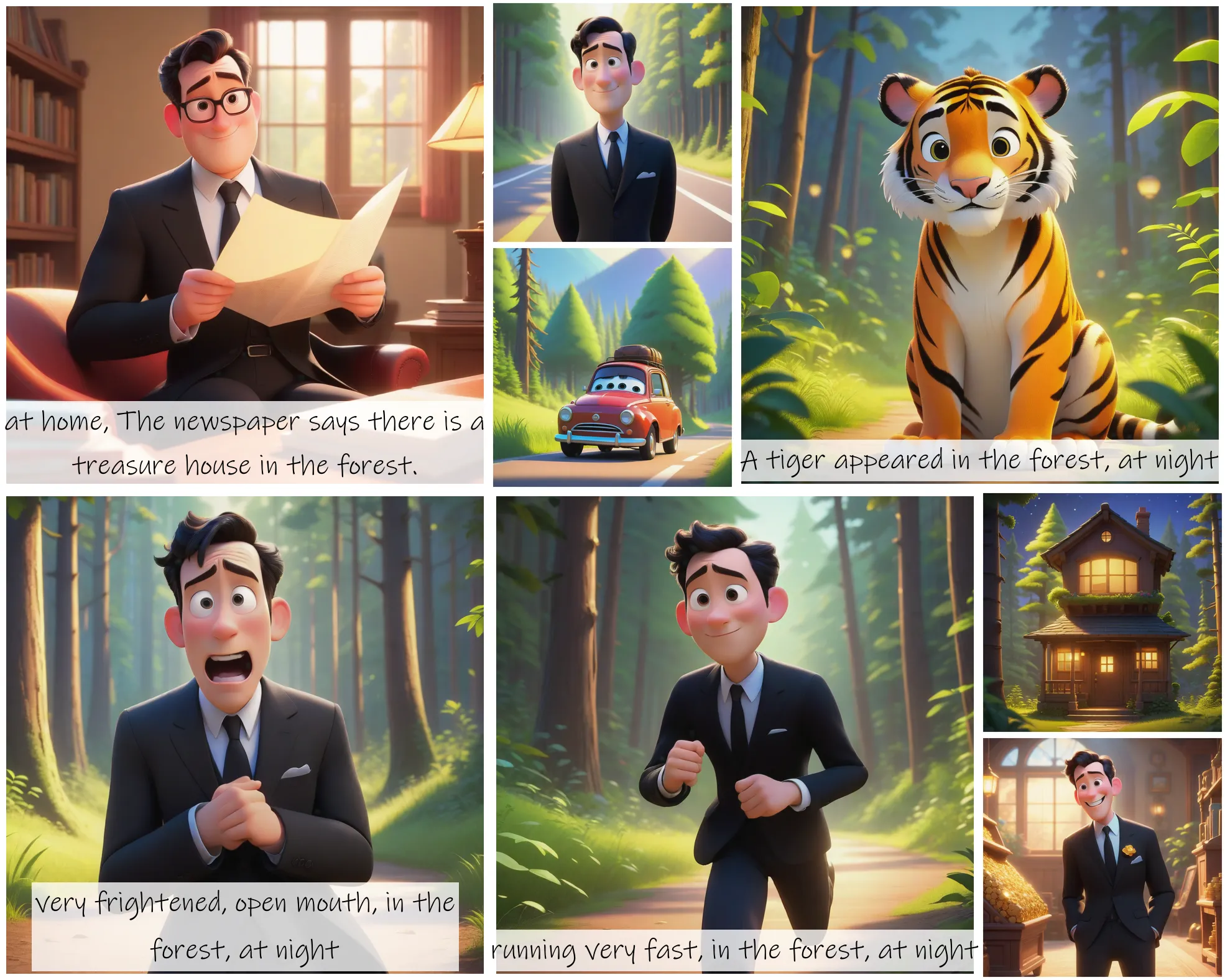

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

fooocus

Fooocus enables high-quality image generation effortlessly, combining the best of Stable Diffusion and Midjourney.

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.