MusicGen: Transforming Text into Music

MusicGen by Meta is a text-to-music model that generates high-quality music samples from text descriptions or audio prompts. It is a single-stage auto-regressive Transformer trained over the tokens of a 32 kHz EnCodec tokenizer with four codebooks sampled at 50 Hz. Because one model predicts all four codebooks, generation stays efficient without a cascade of separate models.
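To make these figures concrete, the short sketch below works out how many audio samples, EnCodec frames, and codebook tokens a clip of a given length corresponds to. It only uses the numbers quoted above (32 kHz, 50 Hz, four codebooks); the function name `token_budget` and its return format are illustrative assumptions, not part of any MusicGen API.

```python
# Figures taken from the description above.
SAMPLE_RATE_HZ = 32_000   # EnCodec audio sample rate
FRAME_RATE_HZ = 50        # codebook frames per second
NUM_CODEBOOKS = 4         # tokens produced per frame


def token_budget(duration_s: float) -> dict:
    """Hypothetical helper: size of a clip in samples, frames, and tokens."""
    frames = round(duration_s * FRAME_RATE_HZ)
    return {
        "samples": round(duration_s * SAMPLE_RATE_HZ),
        "frames": frames,
        "tokens": frames * NUM_CODEBOOKS,
        # Each frame summarizes this many raw audio samples.
        "samples_per_frame": SAMPLE_RATE_HZ // FRAME_RATE_HZ,
    }


if __name__ == "__main__":
    print(token_budget(10))
```

Under these figures, a 10-second clip is 320,000 samples but only 500 frames, or 2,000 tokens across the four codebooks.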

Key Features of MusicGen

- Text-to-Music Generation: Converts textual descriptions into diverse, high-quality music samples.

- Auto-Regressive Transformer: Uses a single-stage auto-regressive Transformer, so no separate models need to be chained together.

- Efficient Training: Trained over tokens from a 32 kHz EnCodec tokenizer with four codebooks, which keeps sequences short while preserving output quality.

- Parallel Prediction: Introduces a small delay between codebooks, allowing them to be predicted in parallel and reducing the number of auto-regressive steps to 50 per second of audio.
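The parallel-prediction idea can be illustrated with a small sketch. It assumes each codebook is shifted by exactly one step relative to the previous one (the text above only says "a small delay") and uses a hypothetical `PAD` value for the empty slots; the function names are made up for this example and are not MusicGen's own code.

```python
PAD = -1  # hypothetical placeholder for positions with no token yet


def apply_delay_pattern(codes, pad=PAD):
    """Shift codebook k right by k steps (assumed one-step delay per codebook).

    codes: list of K token lists, each of length T (one list per codebook).
    Returns K lists of length T + K - 1; column s holds the tokens that
    would be predicted together at auto-regressive step s.
    """
    num_codebooks = len(codes)
    return [
        [pad] * k + list(row) + [pad] * (num_codebooks - 1 - k)
        for k, row in enumerate(codes)
    ]


def undo_delay_pattern(delayed):
    """Invert apply_delay_pattern, recovering the aligned K x T token grid."""
    num_codebooks = len(delayed)
    num_frames = len(delayed[0]) - (num_codebooks - 1)
    return [row[k:k + num_frames] for k, row in enumerate(delayed)]


if __name__ == "__main__":
    # One second of audio: 4 codebooks x 50 frames of dummy token ids.
    codes = [[k * 100 + t for t in range(50)] for k in range(4)]
    delayed = apply_delay_pattern(codes)
    print("steps with delay pattern:", len(delayed[0]))
    print("steps if flattened:", len(codes) * len(codes[0]))
    assert undo_delay_pattern(delayed) == codes
```

In this sketch, one second of audio takes 53 steps (50 plus a fixed offset of three for the delays) instead of the 200 steps needed if the four codebooks were flattened into a single sequence. The offset does not grow with clip length, which is why the cost stays at roughly 50 steps per second of audio.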

Use Cases

- Music Production: Generate unique music tracks based on textual descriptions for use in various media.

- Creative Projects: Enhance creative projects with custom-generated music that matches specific themes or moods.

- Interactive Experiences: Integrate into interactive applications to provide dynamic and responsive musical experiences.

Other Popular Models

sdxl-img2img

SDXL Img2Img performs text-guided image-to-image translation. It uses the weights from Stable Diffusion to generate new images from an input image via the StableDiffusionImg2ImgPipeline from the diffusers library.

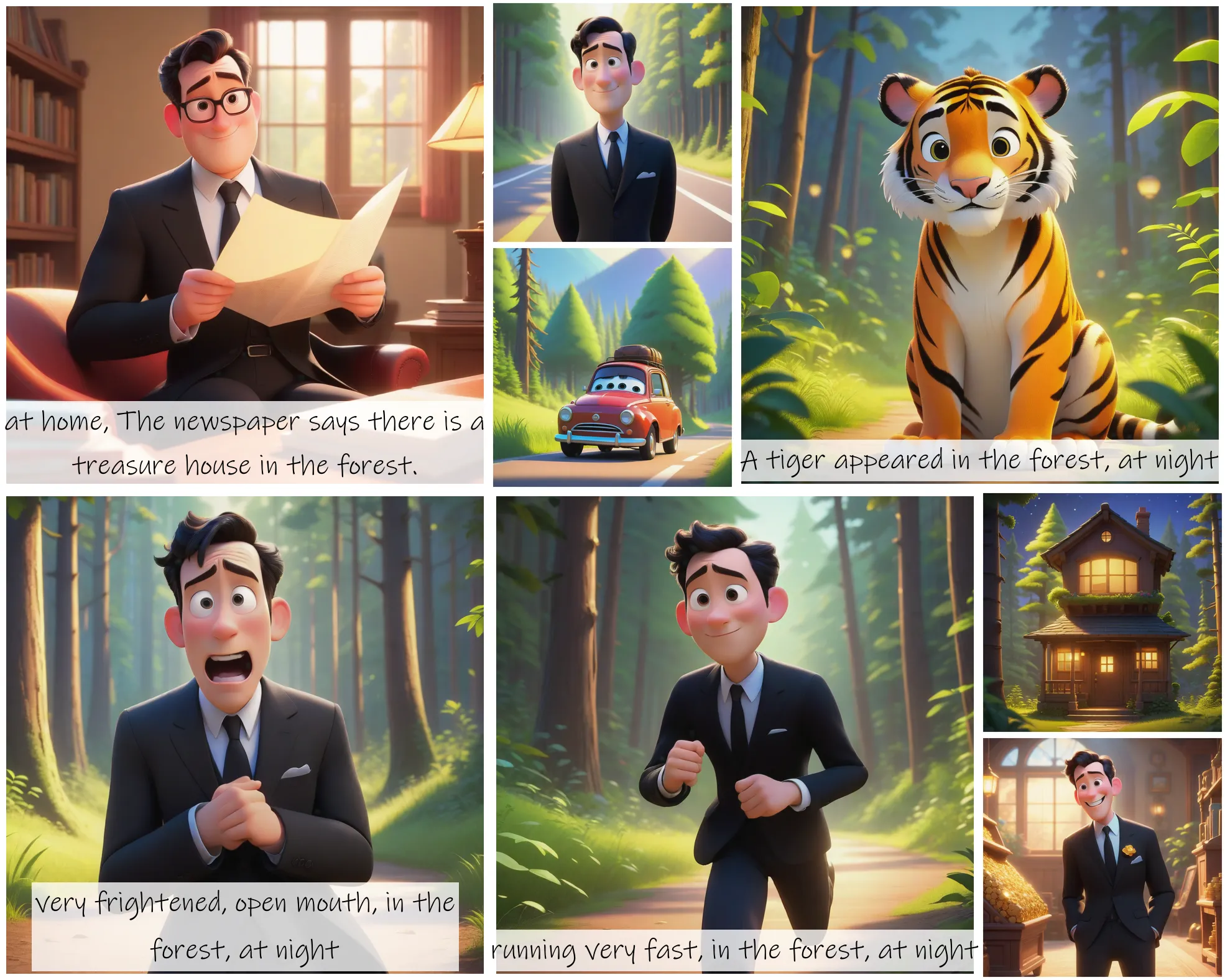

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

idm-vton

Best-in-class virtual clothing try-on in the wild.

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.