Mochi 1

Mochi 1 is an open-source AI model that turns text prompts into high-fidelity videos, with realistic motion and strong prompt adherence.

API

If you're looking for an API, you can call Mochi 1 from your preferred programming language. The example below uses Python.


```python
import base64

import requests

# Helper functions for image inputs. This text-to-video request does not
# send any images, so they are optional here.

# Convert an image file from the filesystem to base64
def image_file_to_base64(image_path):
    with open(image_path, 'rb') as f:
        image_data = f.read()
    return base64.b64encode(image_data).decode('utf-8')


# Fetch an image from a URL and convert it to base64
def image_url_to_base64(image_url):
    response = requests.get(image_url)
    response.raise_for_status()  # Fail loudly if the image could not be fetched
    image_data = response.content
    return base64.b64encode(image_data).decode('utf-8')


# Convert a list of image URLs to base64
def image_urls_to_base64(image_urls):
    return [image_url_to_base64(url) for url in image_urls]


api_key = "YOUR_API_KEY"
url = "https://api.segmind.com/v1/mochi-1"

# Request payload
data = {
    "prompt": "Create a short, looped animation of a small, fluffy creature with big, round eyes and soft fur, similar to a baby Pikachu, emulating the adorable curiosity of baby animals. The creature should be sitting in a human hand, surrounded by a soft, natural forest setting with tiny flowers and gentle lighting. Subtle, lifelike movements include slight head tilts, gentle blinking, and small, curious paw or ear twitches. Its large eyes should glimmer with curiosity and warmth, occasionally widening as it looks around its environment, embodying the innocent curiosity and playfulness of a young animal. Keep the lighting soft, creating a warm, inviting atmosphere with faint sunlight filtering through, highlighting the softness of its fur.",
    "negative_prompt": "blurry, low quality, distorted wings",
    "guidance_scale": 4.5,
    "fps": 16,
    "steps": 30,
    "seed": 985521,
    "frames": 52
}

headers = {'x-api-key': api_key}

response = requests.post(url, json=data, headers=headers)
response.raise_for_status()  # Raise an exception on HTTP errors

print(response.content)  # The response body is the generated video
```

Attributes

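| Parameter | Description | Range |
| --- | --- | --- |
| prompt | Prompt to generate video | |
| negative_prompt | Negative prompt | |
| guidance_scale | Classifier-free guidance scale for the text prompt | 2 to 10 |
| fps | Output frames per second | 7 to 30 |
| steps | Number of denoising steps | 10 to 75 |
| seed | Random seed | |
| frames | Total frames to be generated | 1 to 160 |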
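Before sending a request, it can help to check the payload against the ranges listed above. The following is a minimal Python sketch, not part of the Segmind API: validate_payload and PARAM_RANGES are hypothetical helper names, treating prompt as required is an assumption, and passing these checks does not guarantee the server will accept the request.

```python
# Documented ranges for the numeric Mochi 1 parameters (inclusive bounds).
PARAM_RANGES = {
    "guidance_scale": (2, 10),
    "fps": (7, 30),
    "steps": (10, 75),
    "frames": (1, 160),
}


def validate_payload(payload):
    """Return a list of problems found in a Mochi 1 request payload.

    An empty list means every local range check passed. Assumption:
    a non-empty prompt is required.
    """
    problems = []
    if not str(payload.get("prompt", "")).strip():
        problems.append("prompt is required")
    # Only check parameters that are actually present in the payload.
    for name, (low, high) in PARAM_RANGES.items():
        if name in payload and not (low <= payload[name] <= high):
            problems.append(f"{name}={payload[name]} is outside [{low}, {high}]")
    return problems


if __name__ == "__main__":
    payload = {"prompt": "a cat", "fps": 60, "frames": 52}
    for problem in validate_payload(payload):
        print(problem)
```

Running the usage example reports that fps=60 exceeds the documented maximum of 30, while frames=52 passes.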

To track your credit usage, inspect the response headers of each API call: the x-remaining-credits header reports the number of credits remaining in your account. Monitor this value to avoid disruptions to your API usage.
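A minimal sketch of reading that header in Python. The helper names remaining_credits and should_warn, the warning threshold of 10 credits, and parsing the header value as a number are all illustrative assumptions. With the requests library you would pass response.headers, which matches header names case-insensitively; a plain dict, as in the usage below, is case-sensitive.

```python
def remaining_credits(headers):
    """Return the x-remaining-credits header as a float.

    Returns None if the header is missing or is not numeric
    (assumption: the header holds a plain number).
    """
    value = headers.get("x-remaining-credits")
    if value is None:
        return None
    try:
        return float(value)
    except ValueError:
        return None


def should_warn(headers, threshold=10.0):
    """True when the reported credits fall below a hypothetical threshold."""
    credits = remaining_credits(headers)
    return credits is not None and credits < threshold


if __name__ == "__main__":
    # In practice: remaining_credits(response.headers)
    example_headers = {"x-remaining-credits": "3"}
    print(remaining_credits(example_headers), should_warn(example_headers))
```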

Mochi 1

Mochi 1 is an open-source AI model developed by Genmo AI that generates high-quality videos from text prompts. Built on a 10-billion-parameter architecture, it produces smooth, realistic motion at 30 frames per second and follows prompts closely.

Key Features of Mochi 1

- High-Fidelity Motion: Generates smooth, realistic motion at 30 fps, so the resulting videos look natural and seamless.
- Strong Prompt Adherence: Mochi 1 follows text prompts closely, so the output reflects what you describe. This makes it useful for storytelling, educational content, and creative projects.
- Open-Source Accessibility: Mochi 1 is released under the Apache 2.0 license, which permits personal and commercial use, modification, and redistribution, subject to the license's notice and attribution requirements.
- Versatile Applications: Mochi 1 can be used across many domains, including research, product development, creative expression, and marketing.

Quick Guide to Using Mochi 1

- Prompt: The text describing the video you want. This is the main input that drives generation.
- Negative Prompt (optional): Elements you want the model to avoid, such as "blurry, low quality".
- Guidance Scale: Controls how strongly generation follows the text prompt (classifier-free guidance). Higher values make the output adhere more closely to the prompt.
- FPS (Frames per Second): The frame rate of the output video.
- Steps: The number of denoising steps to run during generation.
- Seed: The random seed. Set it to make results reproducible.
- Frames: The total number of frames to generate.
- Duration of the video: The output duration in seconds is the "Frames" value divided by the "FPS" value. For example, 52 frames at 16 FPS gives 52 / 16 = 3.25 seconds.
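The duration arithmetic fits in a few lines of Python. This sketch also inverts it to choose a frames value for a target length; video_duration and frames_for_duration are hypothetical helper names, and clamping to 1 to 160 follows the documented frames range.

```python
MIN_FRAMES = 1    # documented lower limit for "frames"
MAX_FRAMES = 160  # documented upper limit for "frames"


def video_duration(frames, fps):
    """Duration of the output video in seconds: frames / fps."""
    return frames / fps


def frames_for_duration(seconds, fps):
    """Frames needed for a target duration, clamped to the documented range."""
    frames = round(seconds * fps)
    return max(MIN_FRAMES, min(MAX_FRAMES, frames))


if __name__ == "__main__":
    print(video_duration(52, 16))        # the example from the guide
    print(frames_for_duration(3.25, 16))
```

With the documented maximum of 160 frames, a clip runs at most 10 seconds at 16 FPS (160 / 16) and about 5.3 seconds at 30 FPS (160 / 30).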

Use Cases

- Animated Short Films: Create high-quality animated short films from simple text descriptions.
- Educational Videos: Produce engaging educational content, such as science experiments, historical reenactments, or language-learning aids.
- Social Media Content: Generate eye-catching videos for social media campaigns to increase engagement and reach.
- Artistic Projects: Use Mochi 1 to create unique video art pieces and explore new forms of creative expression.

Other Popular Models

sdxl-controlnet

SDXL ControlNet gives unprecedented control over text-to-image generation. SDXL ControlNet models Introduces the concept of conditioning inputs, which provide additional information to guide the image generation process

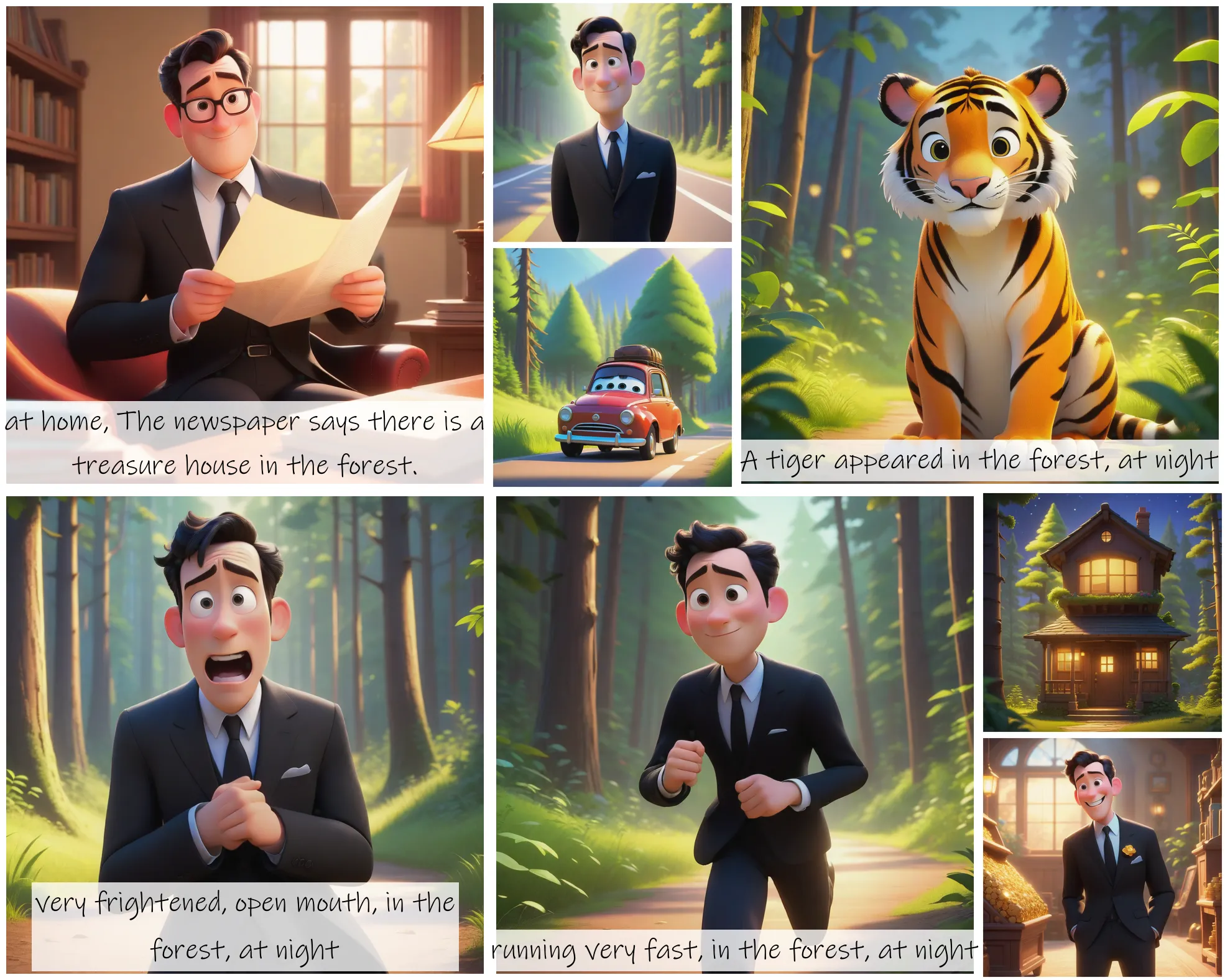

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

sdxl1.0-txt2img

The SDXL model is the official upgrade to the v1.5 model. The model is released as open-source software

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.