Qwen2-VL-7B-Instruct

Qwen2-VL-7B-Instruct is a vision-language model with 7 billion parameters. It can recognize objects, analyze image content, localize objects within an image, act as a visual agent, and generate structured outputs. Its input resolution can be tuned to trade performance against computation cost.

Qwen2-VL-7B-Instruct is an instruction-tuned, 7-billion-parameter vision-language model from the Qwen family, designed to understand and interact with both visual and textual data. It builds on the earlier Qwen-VL models and introduces several key enhancements.

Key Features of Qwen2-VL-7B-Instruct

- Enhanced Visual Understanding: Qwen2-VL recognizes common objects such as plants, animals, and insects, and analyzes text, charts, icons, graphics, and layouts within images.

- Structured Outputs: Qwen2-VL can generate structured outputs for data such as invoices, forms, and tables, which is useful for applications in finance and commerce.

- Object Recognition: The model is proficient in recognizing common objects such as flowers, birds, fish, and insects.

- Image Analysis: Beyond object recognition, Qwen2-VL can analyze text, charts, icons, graphics, and layouts within images.

- Visual Agent: The model can act as a visual agent that reasons about a task and directs tools for computer and phone use.

- Visual Localization: The model can accurately locate objects in an image by generating bounding boxes or points, and it provides stable JSON outputs for coordinates and attributes.

- Flexible Input Resolution: The model supports a wide range of input resolutions. You can adjust min_pixels and max_pixels to balance performance against computation cost, or set resized_height and resized_width directly.

- Benchmark Performance: The model shows strong performance on various image and video benchmarks. For example, it achieves a score of 60 on MMMU (val), 95.7 on DocVQA (test), and 69.6 on MVBench.
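The resolution controls named in the feature list (min_pixels, max_pixels, resized_height, resized_width) can be sketched as below. This is a minimal illustration and not the model's own preprocessing code. It assumes, following the upstream Qwen2-VL model card, that one visual token corresponds to a 28x28 pixel patch. The helper names `pixel_budget` and `image_message` are hypothetical and the image path is a placeholder.

```python
# Sketch of the two ways to control input resolution for Qwen2-VL.
# Assumption: one visual token covers a 28x28 pixel patch (per the upstream model card).
PIXELS_PER_TOKEN = 28 * 28


def pixel_budget(min_tokens: int, max_tokens: int) -> dict:
    """Translate a visual-token range into min_pixels / max_pixels values."""
    if min_tokens <= 0 or max_tokens < min_tokens:
        raise ValueError("expected 0 < min_tokens <= max_tokens")
    return {
        "min_pixels": min_tokens * PIXELS_PER_TOKEN,
        "max_pixels": max_tokens * PIXELS_PER_TOKEN,
    }


def image_message(image: str, prompt: str, **resize_options) -> dict:
    """Build one user message; resize options are attached to the image entry."""
    image_entry = {"type": "image", "image": image, **resize_options}
    text_entry = {"type": "text", "text": prompt}
    return {"role": "user", "content": [image_entry, text_entry]}


# A 256-1280 token range: fewer tokens cost less compute, more tokens keep more detail.
budget = pixel_budget(256, 1280)

# Option 1: bound the pixel count and let preprocessing choose the final size.
bounded = image_message("file:///path/to/image.jpg", "Describe this image.", **budget)

# Option 2: request an exact size instead of a range.
exact = image_message(
    "file:///path/to/image.jpg",
    "Describe this image.",
    resized_height=280,
    resized_width=420,
)
```

In the upstream examples, the same min_pixels and max_pixels values can also be passed as keyword arguments to `AutoProcessor.from_pretrained` in Hugging Face Transformers to set a default for every image. The message dictionaries follow the shape consumed by `process_vision_info` from the `qwen_vl_utils` package. The upstream card notes that exact sizes are rounded to the nearest multiple of 28.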

Limitations of Qwen2-VL-7B-Instruct

The Qwen2-VL-7B-Instruct model, while powerful, does have some limitations:

- Data Timeliness: The image dataset used to train the model was last updated in June 2023, so information after that date may not be covered by the model.

- Limited Recognition of Individuals and Intellectual Property (IP): The model has a limited capacity to recognize specific individuals or IPs. It may not be able to identify all well-known personalities or brands.

- Limited Capacity for Complex Instructions: The model's ability to understand and execute intricate, multi-step instructions needs improvement.

- Insufficient Counting Accuracy: The model's accuracy in counting objects is low, especially in complex scenes.

- Weak Spatial Reasoning Skills: The model's ability to infer positional relationships between objects, particularly in 3D spaces, is inadequate. It may have difficulty judging the relative positions of objects.

- YaRN Impact: The model supports YaRN for processing long texts, but YaRN has a significant negative impact on temporal and spatial localization tasks, so its use is not recommended.

Other Popular Models

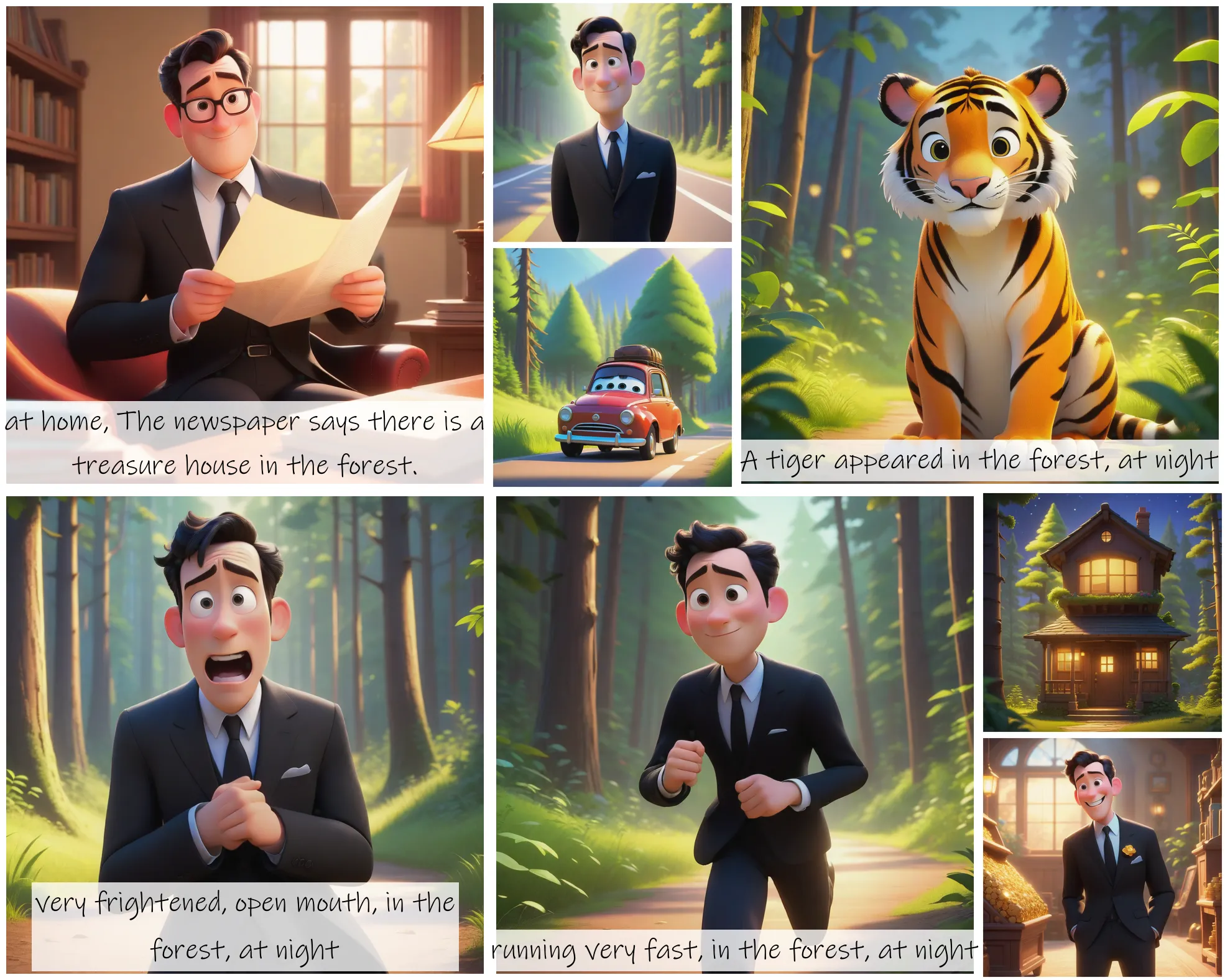

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

fooocus

Fooocus enables high-quality image generation effortlessly, combining the best of Stable Diffusion and Midjourney.

faceswap-v2

Take a picture/gif and replace the face in it with a face of your choice. You only need one image of the desired face. No dataset, no training

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.