Stable Diffusion 2.1

Stable Diffusion is a latent diffusion model that generates images from text. It was created by researchers and engineers from CompVis, Stability AI, and LAION. Stable Diffusion v2 refers to a specific configuration of the model architecture: a downsampling-factor 8 autoencoder, an 865M-parameter UNet, and an OpenCLIP ViT-H/14 text encoder. The penultimate-layer text embeddings from the OpenCLIP ViT-H/14 text encoder condition the generation process, and the SD 2-v model produces 768x768 px images.

Stable Diffusion 2.1

Stable Diffusion 2.1 is a machine learning model released in 2022 and built on the approach described in "High-Resolution Image Synthesis with Latent Diffusion Models." It generates detailed, high-quality images conditioned on text descriptions. It can also be used for related tasks such as inpainting, outpainting, and text-guided image-to-image translation.

Stable Diffusion v2 denotes a particular configuration of the model's structure: an autoencoder with a downsampling factor of 8, an 865M-parameter UNet, and an OpenCLIP ViT-H/14 text encoder. In its SD 2-v variant, this configuration generates output images at a resolution of 768x768 pixels. The pipeline has three main components: a text encoder, a UNet, and an autoencoder. The text encoder runs first. The input prompt is split into tokens, and the encoder converts those tokens into embeddings, numerical representations of the text that the UNet uses to guide the generation of the final image.
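The shape arithmetic implied by this configuration can be sketched in a few lines of Python. Only the downsampling factor of 8 comes from the description above; the 4 latent channels, the 77-token prompt length, and the 1,024-dimensional text embeddings are assumptions taken from the publicly released SD 2.x configuration, and the function names are hypothetical helpers for illustration.

```python
# Shape bookkeeping for a Stable Diffusion 2.x-style pipeline (illustrative sketch).
# Only DOWNSAMPLE_FACTOR comes from the model description; the other constants
# are assumptions based on the public SD 2.x configuration.

DOWNSAMPLE_FACTOR = 8      # autoencoder shrinks height and width by this factor
LATENT_CHANNELS = 4        # channels in the autoencoder's latent (assumed)
TEXT_CONTEXT_LENGTH = 77   # prompt tokens per sequence after padding/truncation (assumed)
TEXT_EMBED_DIM = 1024      # width of OpenCLIP ViT-H/14 text embeddings (assumed)


def latent_shape(height: int, width: int) -> tuple[int, int, int]:
    """(channels, h, w) of the latent the UNet denoises for a height x width image."""
    if height % DOWNSAMPLE_FACTOR or width % DOWNSAMPLE_FACTOR:
        raise ValueError(f"height and width must be multiples of {DOWNSAMPLE_FACTOR}")
    return (LATENT_CHANNELS, height // DOWNSAMPLE_FACTOR, width // DOWNSAMPLE_FACTOR)


def text_embedding_shape() -> tuple[int, int]:
    """(tokens, dims) of the text conditioning the UNet receives for one prompt."""
    return (TEXT_CONTEXT_LENGTH, TEXT_EMBED_DIM)


if __name__ == "__main__":
    c, h, w = latent_shape(768, 768)
    print("latent shape:", (c, h, w))                       # (4, 96, 96)
    print("text embedding shape:", text_embedding_shape())  # (77, 1024)
    print("pixel values per latent value:", (768 * 768 * 3) / (c * h * w))  # 48.0
```

Under these assumptions, a 768x768 RGB image is represented by a 4x96x96 latent, about 48 times fewer values, which is a large part of why diffusing in latent space is cheaper than diffusing directly over pixels.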

The text embeddings are then passed to the UNet, which is where the diffusion process takes place. Rather than working directly on pixels, this process operates in the autoencoder's latent space, a compressed, high-dimensional representation in which similar images lie close together. Generation starts from a latent filled with random noise; at each step, the UNet, conditioned on the text embeddings, predicts the noise to remove, and after typically 20 to 30 denoising steps the latent represents a coherent image. Finally, the autoencoder's decoder converts the denoised latent back into the full-resolution image that you see on the screen. This multi-step process is what allows Stable Diffusion 2.1 to generate high-quality images from text descriptions, making it a powerful tool for a variety of applications.
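The denoising loop described above can be illustrated with a toy, pure-Python sketch of deterministic DDIM sampling, one of several samplers used with Stable Diffusion. It rests on loudly stated assumptions: the "latent" is a short list of floats, the noise-schedule values are assumed, and `oracle_noise_predictor` is a hypothetical stand-in for the UNet that already knows the target latent, so the loop converges exactly. It uses the noise-prediction (epsilon) formulation for simplicity, whereas the SD 2-v model is trained with a different target (v-prediction), and it omits the final autoencoder decoding step.

```python
import math
import random

NUM_TRAIN_STEPS = 1000  # length of the training noise schedule (assumed)


def alphas_cumprod(beta_start: float = 0.00085, beta_end: float = 0.012,
                   n: int = NUM_TRAIN_STEPS) -> list[float]:
    """Cumulative products of (1 - beta_t) for a 'scaled linear' schedule (assumed values)."""
    lo, hi = math.sqrt(beta_start), math.sqrt(beta_end)
    out, prod = [], 1.0
    for i in range(n):
        beta = (lo + (hi - lo) * i / (n - 1)) ** 2  # interpolate in sqrt space, then square
        prod *= 1.0 - beta
        out.append(prod)
    return out


def oracle_noise_predictor(x_t: list[float], abar_t: float, target: list[float]) -> list[float]:
    """Hypothetical UNet stand-in: returns the exact noise separating x_t from the target latent."""
    return [(x - math.sqrt(abar_t) * x0) / math.sqrt(1.0 - abar_t)
            for x, x0 in zip(x_t, target)]


def ddim_sample(target: list[float], num_steps: int = 25, seed: int = 0) -> list[float]:
    """Deterministic DDIM (eta = 0) loop that turns Gaussian noise into a clean latent."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    abar = alphas_cumprod()
    # num_steps timesteps spread evenly from the noisiest (999) down to the cleanest (0).
    timesteps = [round(i * (NUM_TRAIN_STEPS - 1) / (num_steps - 1))
                 for i in range(num_steps)][::-1]
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from pure Gaussian noise
    for i, t in enumerate(timesteps):
        a_t = abar[t]
        # After the last timestep, step to a noise-free latent (alpha-bar = 1).
        a_prev = abar[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        eps = oracle_noise_predictor(x, a_t, target)
        # Estimate the clean latent, then move it to the next (lower) noise level.
        x0_pred = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for xi, e in zip(x, eps)]
        x = [math.sqrt(a_prev) * p + math.sqrt(1.0 - a_prev) * e for p, e in zip(x0_pred, eps)]
    return x  # in the real pipeline, this latent would go to the autoencoder's decoder


if __name__ == "__main__":
    target = [0.5, -1.2, 2.0, 0.0]  # made-up "clean latent" for illustration
    print([round(v, 6) for v in ddim_sample(target, num_steps=25)])
```

In this sketch, swapping the oracle for a trained UNet conditioned on the text embeddings is what would make the result depend on the prompt; the structure of the loop stays the same.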

Its ability to generate high-resolution images from text descriptions opens up many possibilities for content creation. Combining a text encoder, a UNet, and an autoencoder makes the image generation process robust and versatile, and the iterative denoising process turns an initially random, noisy latent into a coherent, high-quality image.

Applications and use cases of Stable Diffusion 2.1

Stable Diffusion 2.1's ability to generate high-quality images from text descriptions has a wide range of applications across various industries. Here are some of the key use cases:

- Character Creation for Visual Effects: In the film and gaming industries, Stable Diffusion 2.1 can be used to create detailed, unique characters from text descriptions. This can speed up the character design process and help produce characters that would be difficult to design manually.

- E-Commerce Product Imaging: For e-commerce, Stable Diffusion 2.1 can help generate different views of a product from a few images and text descriptions. This can reduce the need for costly and time-consuming product photo shoots.

- Image Editing: Stable Diffusion 2.1 can be used to edit images in a variety of ways, such as changing the color of objects, adding or removing elements, or replacing the background. This is particularly useful for photo editing apps and services.

- Fashion: In the fashion industry, Stable Diffusion 2.1 can be used to generate images of clothing items in different colors or styles, or to show what a person might look like wearing a particular garment. This can make shopping more interactive and personalized for customers.

- Gaming Asset Creation: Stable Diffusion 2.1 can be used to generate assets for video games, such as characters, environments, or items. This can speed up game development and support the creation of unique, detailed game assets.

- Web Design: For web design, Stable Diffusion 2.1 can generate images for website layouts or themes from text descriptions. This can make the design process more efficient and support unique, personalized website designs.

Stable Diffusion 2.1 license

The Stable Diffusion 2.1 model is released under the "CreativeML Open RAIL++-M" license, which is designed to promote both open and responsible use of the model. You may add your own copyright statement to your modifications and provide additional or different license terms for them. You are accountable for the output you generate using the model, and you may not use that output in any way that violates the provisions of the license.

Other Popular Models

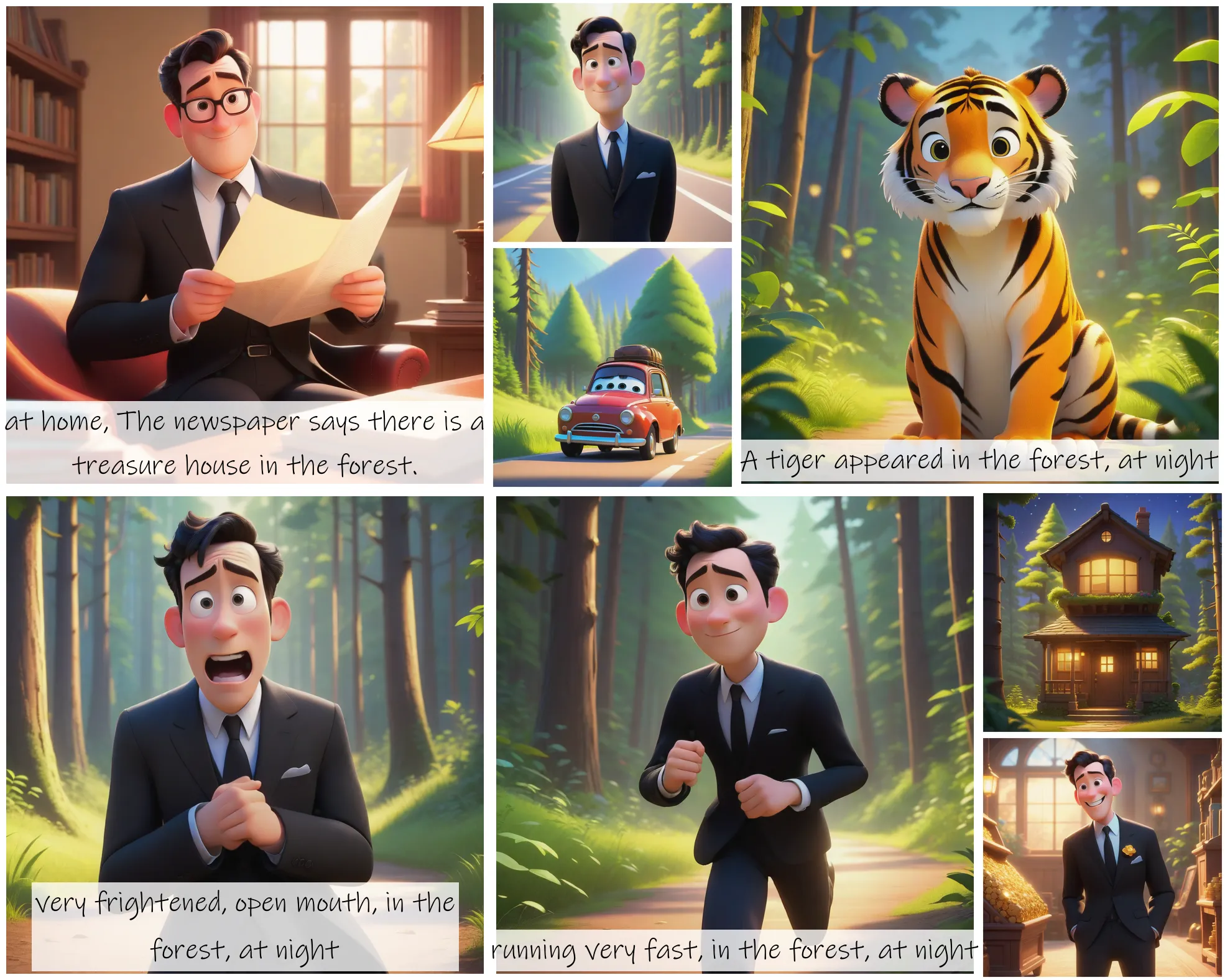

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

face-to-many

Turn a face into 3D, emoji, pixel art, video game, claymation or toy

insta-depth

InstantID aims to generate customized images with various poses or styles from only a single reference ID image while ensuring high fidelity

sdxl-inpaint

This model is capable of generating photo-realistic images given any text input, with the extra capability of inpainting the pictures by using a mask