API

To call the API, pick the example for your preferred programming language.

    import base64

    import requests


    # Use this function to convert an image file from the filesystem to base64
    def image_file_to_base64(image_path):
        with open(image_path, 'rb') as f:
            image_data = f.read()
        return base64.b64encode(image_data).decode('utf-8')


    # Use this function to fetch an image from a URL and convert it to base64
    def image_url_to_base64(image_url):
        response = requests.get(image_url)
        image_data = response.content
        return base64.b64encode(image_data).decode('utf-8')


    # Use this function to convert a list of image URLs to base64
    def image_urls_to_base64(image_urls):
        return [image_url_to_base64(url) for url in image_urls]


    api_key = "YOUR_API_KEY"
    url = "https://api.segmind.com/v1/svd"

    # Request payload
    data = {
        "image": image_url_to_base64("https://segmind-sd-models.s3.amazonaws.com/outputs/svd_input.png"),  # Or use image_file_to_base64("IMAGE_PATH")
        "fps": 7,
        "motion": 127,
        "seed": 452361789,
        "cond_aug": 0.1,
        "frames": 14,
        "resize_method": "maintain_aspect_ratio",
        "base64": False
    }

    headers = {'x-api-key': api_key}

    response = requests.post(url, json=data, headers=headers)
    print(response.content)  # The response is the generated video

Attributes

image

Input image.

fps

Number of frames per second.

min: 1, max: 60

motion

Controls the amount of motion in the generated video.

min: 1, max: 180

seed

Seed for video generation.

min: -1, max: 999999999999999

cond_aug

Amount of noise added to the conditioning image.

min: 0, max: 1

frames

Number of frames in the output video.

resize_method

Determines the output video dimensions.

Allowed values:

base64

Base64 encoding of the output video.
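The documented ranges can be checked before a request is sent, so that an out-of-range value does not cost an API call. The following is a minimal sketch, not part of the Segmind API: `validate_payload`, `clip_duration_seconds`, and `DOCUMENTED_RANGES` are hypothetical helper names, and it assumes that the min/max pairs listed above belong to `fps`, `motion`, `seed`, and `cond_aug`, in the same order as the example payload. The duration helper further assumes that the clip is played back at exactly `fps` frames per second.

```python
# Documented (min, max) ranges. The mapping of each range to a parameter
# name is an assumption based on the order of the example payload.
DOCUMENTED_RANGES = {
    "fps": (1, 60),
    "motion": (1, 180),
    "seed": (-1, 999999999999999),
    "cond_aug": (0, 1),
}


def validate_payload(payload):
    """Return a list of range problems; an empty list means none were found."""
    problems = []
    for name, (low, high) in DOCUMENTED_RANGES.items():
        if name not in payload:
            # Whether a parameter is optional is not documented here,
            # so missing keys are skipped rather than reported.
            continue
        value = payload[name]
        if not low <= value <= high:
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    return problems


def clip_duration_seconds(frames, fps):
    """Assumed clip length: `frames` frames played at `fps` frames per second."""
    return frames / fps


example = {"fps": 7, "motion": 127, "seed": 452361789, "cond_aug": 0.1, "frames": 14}
print(validate_payload(example))                                  # []
print(clip_duration_seconds(example["frames"], example["fps"]))   # 2.0
```

With the values from the example request, 14 frames at 7 frames per second would give a clip of about 2 seconds under that playback assumption.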

To keep track of your credit usage, inspect the response headers of each API call. The x-remaining-credits header reports the number of credits remaining in your account. Monitor this value to avoid disruptions to your API usage.
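As an illustration of the credit check described above, the sketch below reads the `x-remaining-credits` header from a mapping of response headers. The helper name `remaining_credits` and the `LOW_CREDIT_THRESHOLD` value are hypothetical, and the code assumes the header carries a plain number; if the value is missing or not numeric, it returns `None` instead of guessing.

```python
def remaining_credits(headers):
    """Return the x-remaining-credits value as a float, or None if it is absent or not numeric."""
    # HTTP header names are case-insensitive, so compare lowercased names.
    for name, value in headers.items():
        if name.lower() == "x-remaining-credits":
            try:
                return float(value)
            except (TypeError, ValueError):
                return None
    return None


LOW_CREDIT_THRESHOLD = 10  # hypothetical threshold; choose one that fits your usage

# Made-up header values, used only to demonstrate the helper.
sample_headers = {"Content-Type": "video/mp4", "X-Remaining-Credits": "42.5"}

credits = remaining_credits(sample_headers)
if credits is not None and credits < LOW_CREDIT_THRESHOLD:
    print("Credits are running low:", credits)
else:
    print("Remaining credits:", credits)
```

With the `requests` library used in the example request, the same helper can be called as `remaining_credits(response.headers)`.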

Stable Video Diffusion (SVD)

Stable Video Diffusion (SVD) is a significant advance in AI-driven video generation. It produces high-quality video through latent diffusion: video frames are mapped into a compressed latent space, where their patterns and structure can be modeled and manipulated.

At its core, SVD utilizes a deep neural network trained on vast datasets to understand and replicate the nuances of video dynamics. The model’s ability to interpolate between frames results in smooth transitions and realistic motion, even in scenarios where input data is sparse. This makes SVD particularly adept at tasks such as video upscaling, frame rate conversion, and even generating new content based on textual descriptions.

One of the standout features of SVD is its efficiency. By optimizing the diffusion process, SVD reduces the computational load typically associated with video generation. This opens up new possibilities for creators who can now produce high-fidelity videos without the need for extensive hardware resources.

Other Popular Models

sdxl-img2img

SDXL Img2Img is used for text-guided image-to-image translation. This model uses the weights from Stable Diffusion to generate new images from an input image, using the StableDiffusionImg2ImgPipeline from diffusers.

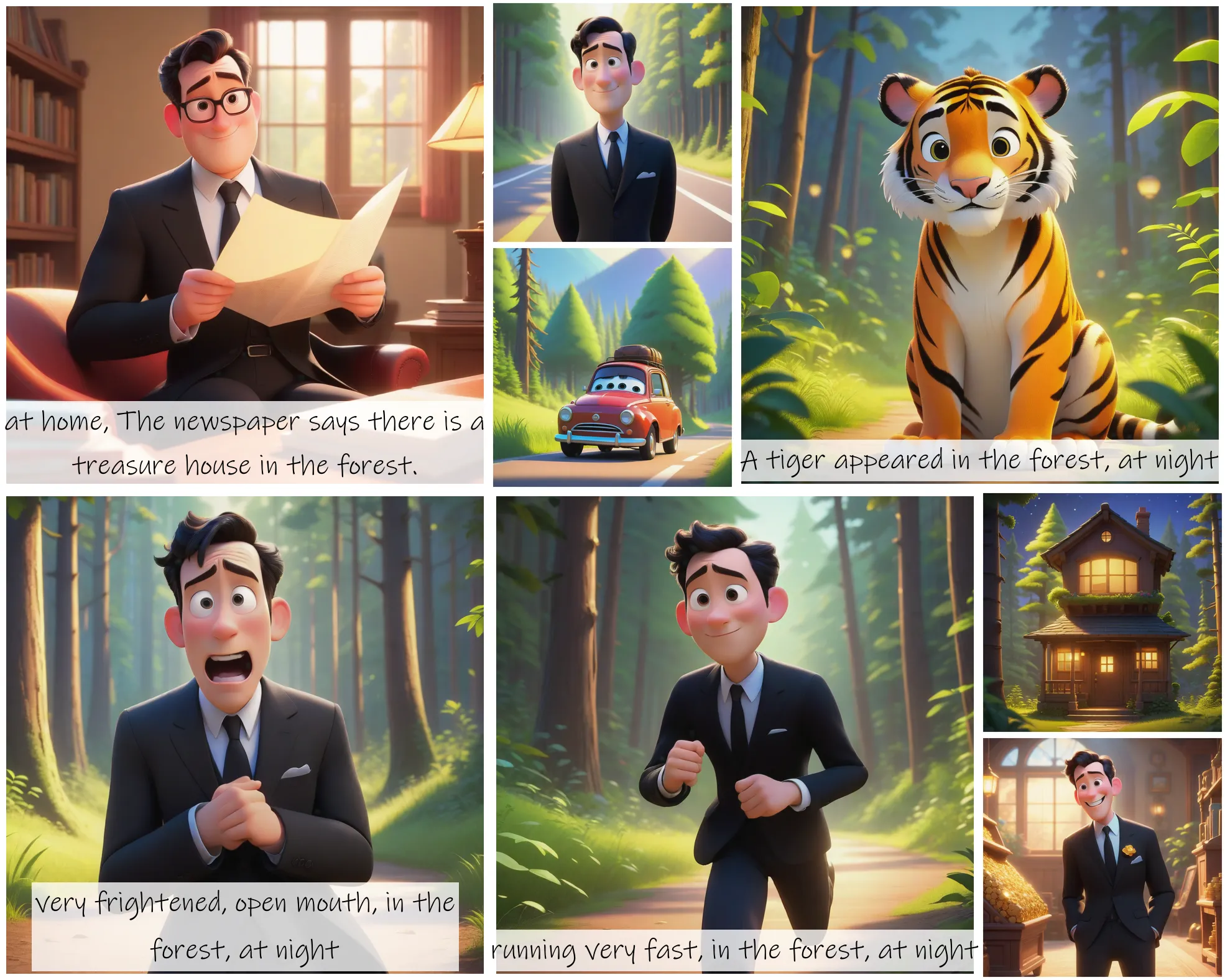

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

fooocus

Fooocus enables high-quality image generation effortlessly, combining the best of Stable Diffusion and Midjourney.

sd2.1-faceswapper

Take a picture or GIF and replace the face in it with a face of your choice. You only need one image of the desired face. No dataset or training is required.