PixelFlow allows you to use all these features

Unlock the full potential of generative AI with Segmind. Create stunning visuals and innovative designs with total creative control. Take advantage of powerful development tools to automate processes and models, elevating your creative workflow.

Segmented Creation Workflow

Gain greater control by dividing the creative process into distinct steps, refining each phase.

Customized Output

Customize at various stages, from initial generation to final adjustments, ensuring tailored creative outputs.

Layering Different Models

Integrate and utilize multiple models simultaneously, producing complex and polished creative results.

Workflow APIs

Deploy PixelFlow workflows as APIs quickly, without server setup, ensuring scalability and efficiency.

Text-Embedding-3-Large

Text-embedding-3-large is an embedding model from OpenAI that generates high-dimensional text embeddings. These embeddings are numerical representations of text, suited to a wide range of natural language processing (NLP) tasks, including semantic search, text clustering, and classification. Its larger size favors accuracy and depth of representation, making it suitable for applications that require high-quality text representation.

How to Fine-Tune Outputs?

Input Text Length: Balance text length according to the specific task requirements. Short texts may not capture enough context, while very long texts might need truncation or summarization strategies.
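One way to handle very long inputs is to split them into overlapping chunks and embed each chunk separately. The sketch below is a minimal illustration under stated assumptions: `chunk_text`, `max_words`, and `overlap` are hypothetical names, and word counts are used only as a rough stand-in for the model's token limit, which is measured in tokens rather than words.

```python
def chunk_text(text, max_words=200, overlap=20):
    """Split text into overlapping word windows (hypothetical helper).

    Word counts only approximate token counts; treat max_words as a
    rough budget, not an exact limit.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")

    words = text.split()
    if not words:
        return []

    step = max_words - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once the current window has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


if __name__ == "__main__":
    sample = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(sample, max_words=4, overlap=1):
        print(chunk)
```

The overlap keeps some shared context between neighboring chunks, which can help when a relevant sentence straddles a chunk boundary. Short texts pass through unchanged as a single chunk.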

Use Cases

Text-embedding-3-large is versatile and can be deployed in numerous NLP applications:

- Semantic Search: Enhance search engines by leveraging embeddings to measure similarity between user queries and documents.

- Text Classification: Use embeddings as input features for training machine learning models in various classification tasks.

- Clustering and Topic Modeling: Employ clustering algorithms on embeddings to identify topics or group similar texts in a corpus.

- Recommendation Systems: Improve recommendation accuracy by computing and comparing embeddings of user queries and item descriptions.
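The semantic search and recommendation use cases above both come down to comparing embedding vectors. The sketch below shows one common way to do that, using cosine similarity to rank documents against a query. It assumes the embeddings have already been computed; the three-dimensional vectors and the names `cosine_similarity` and `rank_documents` are made up for illustration, and real embeddings from the model have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as similar to nothing
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Toy vectors standing in for precomputed embeddings (assumption).
    docs = {
        "cats": [0.9, 0.1, 0.0],
        "dogs": [0.7, 0.3, 0.1],
        "finance": [0.0, 0.2, 0.9],
    }
    query = [1.0, 0.0, 0.0]
    for doc_id, score in rank_documents(query, docs):
        print(f"{doc_id}: {score:.3f}")
```

The same ranking function can serve a recommendation system by treating item descriptions as the documents. For classification or clustering, the vectors would instead be passed as features to a separate model or clustering algorithm.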

Other Popular Models

sdxl-controlnet

SDXL ControlNet gives unprecedented control over text-to-image generation. SDXL ControlNet models introduce the concept of conditioning inputs, which provide additional information to guide the image generation process.

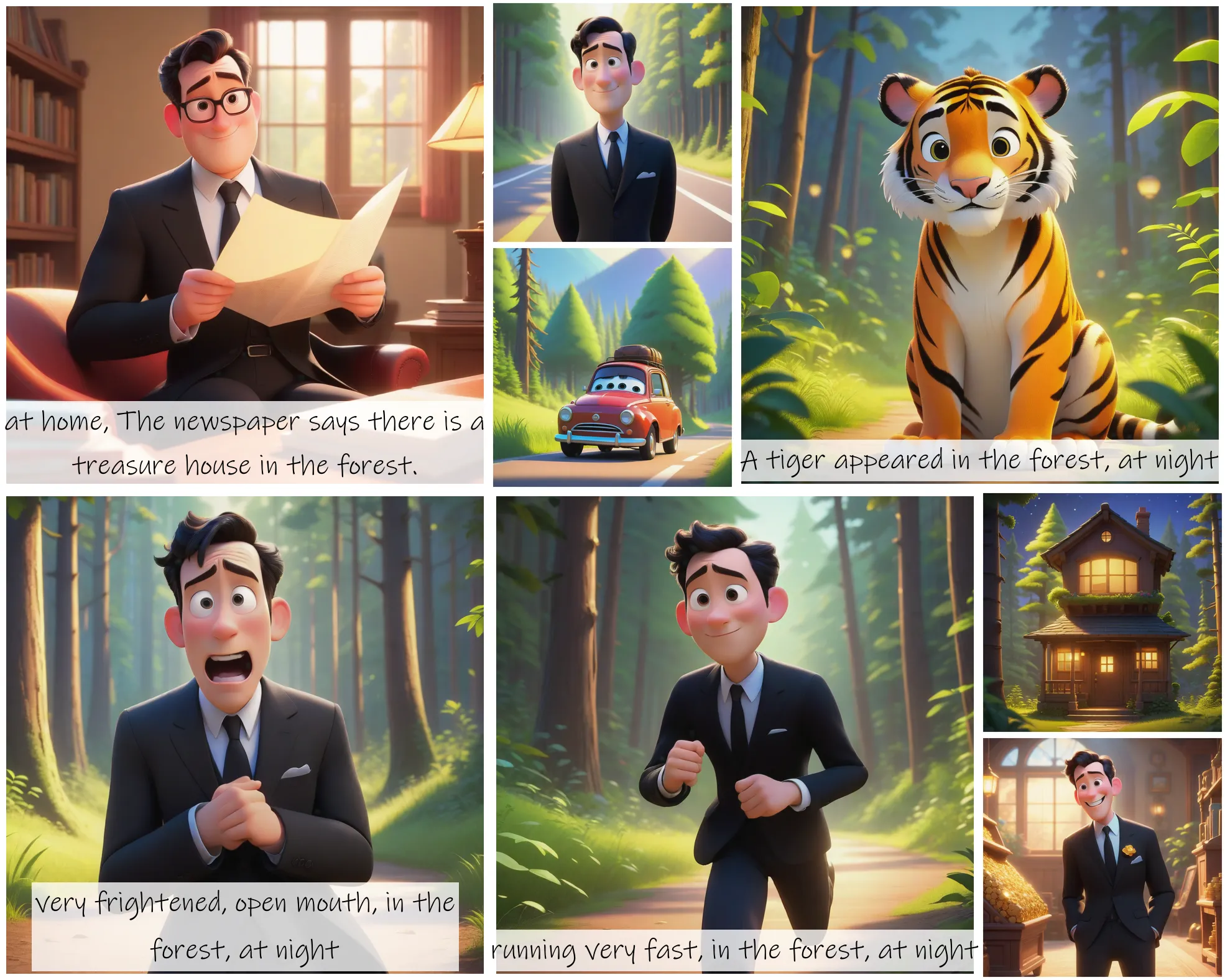

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

face-to-many

Turn a face into a 3D, emoji, pixel art, video game, claymation, or toy style.

sd2.1-faceswapper

Take a picture or GIF and replace the face in it with a face of your choice. You only need one image of the desired face. No dataset, no training.