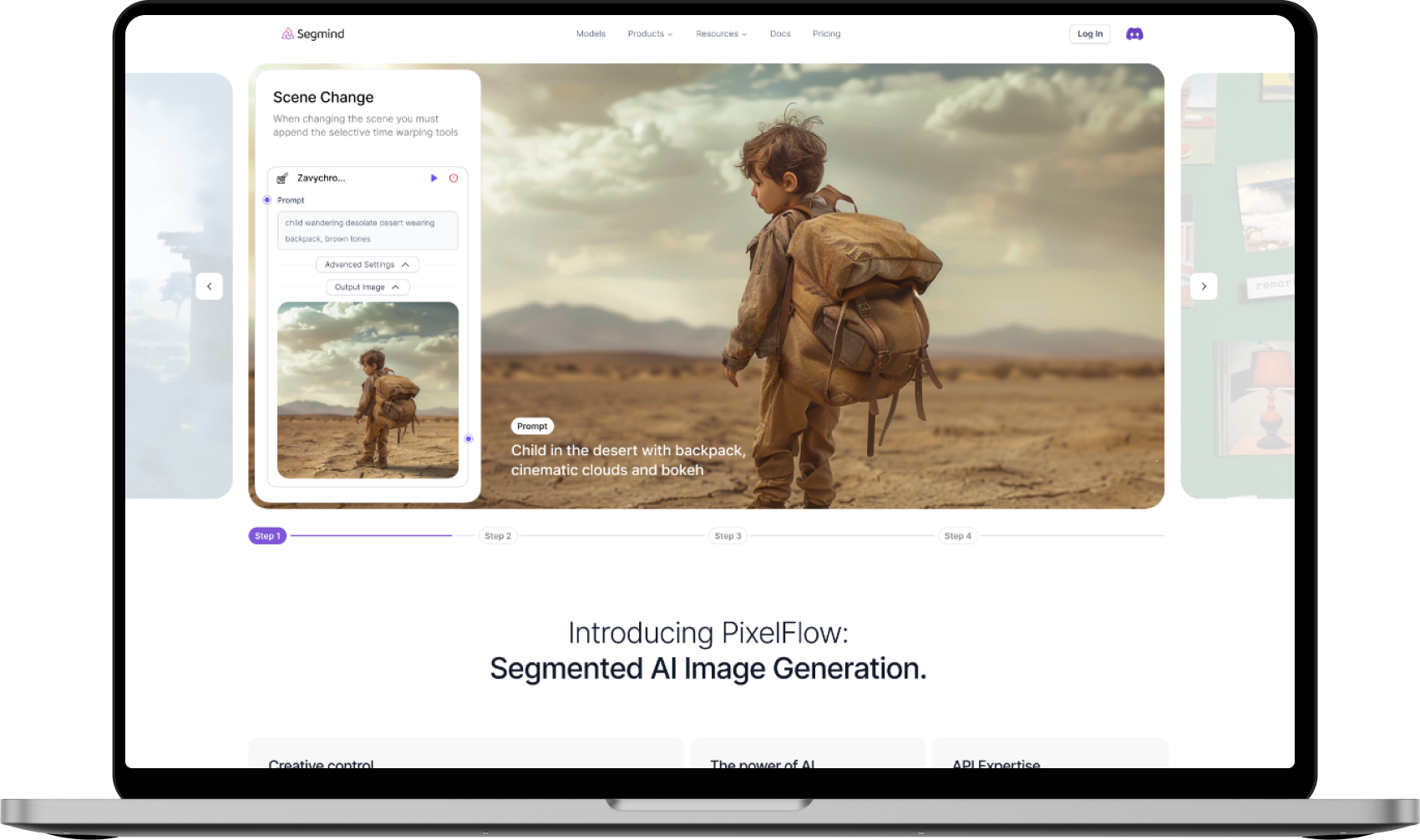

Generate and compare top image generation models, using prompts enhanced by Llama 3.1 8B

The Generate and Compare Image Outputs workflow evaluates the visual outputs of different image generation models side by side. Every model, including Flux, AuraFlow, and SDXL variants, receives the same input prompt, so the results can be compared directly and used to make informed decisions about model selection and application.
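The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Pixelflow implementation: `compare_models`, `fake_enhance`, and `fake_generate` are hypothetical names, and the stand-in functions return placeholder bytes instead of calling any real model or API. It also assumes the prompt is enhanced once and the same enhanced text is then sent to every model.

```python
from typing import Callable, Dict, List

# Model identifiers as listed in this workflow.
IMAGE_MODELS: List[str] = [
    "flux-realism-lora",
    "flux-dev",
    "flux-schnell",
    "aura-flow",
    "stable-diffusion-3-medium-txt2img",
    "sdxl1.0-newreality-lightning",
    "sdxl1.0-colossus-lightning",
    "sdxl1.0-realism-lightning",
    "ssd-1b",
]


def compare_models(
    prompt: str,
    enhance: Callable[[str], str],
    generate: Callable[[str, str], bytes],
    models: List[str] = IMAGE_MODELS,
) -> Dict[str, bytes]:
    """Enhance the prompt once, then send the same text to every model."""
    enhanced = enhance(prompt)
    return {model: generate(model, enhanced) for model in models}


# Hypothetical stand-ins so the sketch runs without network access.
def fake_enhance(prompt: str) -> str:
    # A real workflow would ask the LLM to rewrite the prompt here.
    return prompt + ", highly detailed, natural lighting"


def fake_generate(model: str, prompt: str) -> bytes:
    # A real workflow would return image bytes from the named model.
    return f"{model}|{prompt}".encode("utf-8")


results = compare_models("a lighthouse at dusk", fake_enhance, fake_generate)
for model, image in results.items():
    print(model, len(image))
```

Passing the enhancer and generator in as callables keeps the fan-out logic independent of any particular client library; swapping the stand-ins for real calls would not change `compare_models` itself.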

Models Used in the Pixelflow

flux-realism-lora

Flux Realism LoRA with upscale, developed by XLabs AI, is a model designed to generate realistic images from textual descriptions.

flux-dev

Flux Dev is a 12-billion-parameter rectified flow transformer capable of generating images from text descriptions.

flux-schnell

Flux Schnell is a state-of-the-art text-to-image generation model engineered for speed and efficiency.

llama-v3p1-8b-instruct

Meta developed and released the Meta Llama 3.1 family of large language models (LLMs), a collection of pretrained and instruction-tuned generative text models in 8B, 70B, and 405B sizes. The Llama 3.1 instruction-tuned models are optimized for dialogue use cases and outperform many of the available open-source chat models on common industry benchmarks.

aura-flow

AuraFlow is described as the largest fully open-source flow-based model for text-to-image generation.

stable-diffusion-3-medium-txt2img

Stable Diffusion is a family of latent diffusion models that generate images from text. It was originally created by a team of researchers and engineers from CompVis, Stability AI, and LAION. Stable Diffusion 3 Medium, the version used here, is Stability AI's 2-billion-parameter Multimodal Diffusion Transformer (MMDiT) text-to-image model, which conditions generation on three text encoders (two CLIP models and T5-XXL). For comparison, the earlier Stable Diffusion v2 architecture uses a downsampling-factor-8 autoencoder with an 865M-parameter UNet and an OpenCLIP ViT-H/14 text encoder, conditions generation on that encoder's penultimate text embeddings, and produces 768x768 px images in its SD 2-v variant.

sdxl1.0-newreality-lightning

NewReality Lightning SDXL is a lightning-fast text-to-image generation model. It can generate high-quality 1024px images in a few steps.

sdxl1.0-colossus-lightning

Colossus Lightning SDXL is a lightning-fast text-to-image generation model. It can generate high-quality 1024px images in a few steps.

sdxl1.0-realism-lightning

Realism Lightning SDXL is a lightning-fast text-to-image generation model. It can generate high-quality 1024px images in a few steps.

ssd-1b

The Segmind Stable Diffusion Model (SSD-1B) is a distilled version of Stable Diffusion XL (SDXL) that is 50% smaller and offers a 60% speedup while maintaining high-quality text-to-image generation. It has been trained on diverse datasets, including GRIT and Midjourney scrape data, to enhance its ability to create a wide range of visual content from textual prompts.