API

To call the API, use the example for your preferred programming language. The example below uses Python.

import requests

api_key = "YOUR_API_KEY"
url = "https://api.segmind.com/v1/ltx-video"

# Prepare data and files
data = {}
files = {}

data['cfg'] = 3
data['seed'] = 2357108

# For parameter "image", you can send a raw file or a URI:
# files['image'] = open('IMAGE_PATH', 'rb')  # To send a file
data['image'] = 'null'  # To send a URI

data['steps'] = 30
data['length'] = 97
data['prompt'] = "A woman with blood on her face and a white tank top looks down and to her right, then back up as she speaks. She has dark hair pulled back, light skin, and her face and chest are covered in blood. The camera angle is a close-up, focused on the woman's face and upper torso. The lighting is dim and blue-toned, creating a somber and intense atmosphere. The scene appears to be from a movie or TV show"
data['target_size'] = 640
data['aspect_ratio'] = "3:2"
data['negative_prompt'] = "low quality, worst quality, deformed, distorted"

headers = {'x-api-key': api_key}

# Send multipart form data if there are files; otherwise send JSON
if files:
    response = requests.post(url, data=data, files=files, headers=headers)
else:
    response = requests.post(url, json=data, headers=headers)

print(response.content)  # The response is the generated video

Attributes

cfg: How strongly the video follows the prompt. min: 1, max: 20.

seed: Set a seed for reproducibility. Random by default.

image: Optional input image URL to use as the starting frame.

steps: Number of steps. min: 1, max: 50.

length: Length of the output video in frames. Restricted to a fixed set of allowed values.

prompt: Text prompt for the video. This model needs long, descriptive prompts; if the prompt is too short, the quality won't be good.

target_size: Target size for the output video. Restricted to a fixed set of allowed values.

aspect_ratio: Aspect ratio of the output video. Ignored if an image is provided. Restricted to a fixed set of allowed values.

negative_prompt: Things you do not want to see in your video.
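
The ranges listed above can be checked on the client before a request is sent. The following is a minimal sketch, not part of the Segmind API: `validate_params` is a hypothetical helper, and it assumes the first min/max pair (1 to 20) belongs to `cfg` and the second (1 to 50) to `steps`, as the order of parameters in the request example suggests. Treating `prompt` as required is also an assumption.

```python
def validate_params(params):
    """Return a list of problems found in a request payload (empty if none).

    Hypothetical client-side check; the ranges are assumed to map to
    `cfg` (1-20) and `steps` (1-50).
    """
    problems = []
    ranges = {"cfg": (1, 20), "steps": (1, 50)}
    for name, (low, high) in ranges.items():
        if name in params and not (low <= params[name] <= high):
            problems.append(
                f"{name} must be between {low} and {high}, got {params[name]}"
            )
    # Assumption: a non-empty prompt is required.
    if not str(params.get("prompt", "")).strip():
        problems.append("prompt is missing; use a long, descriptive prompt")
    return problems


# Example: an out-of-range cfg is reported before any API call is made.
for problem in validate_params({"cfg": 25, "steps": 30, "prompt": "A woman speaks"}):
    print(problem)
```

Returning a list of problems rather than raising on the first one lets a caller report everything wrong with a payload at once.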

To keep track of your credit usage, inspect the response headers of each API call. The x-remaining-credits header reports the number of credits remaining in your account. Monitor this value to avoid disruptions to your API usage.
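
As a rough sketch of how this header could be read, the helper below looks up `x-remaining-credits` in any mapping of response headers. The function names and the threshold are hypothetical, and the assumption that the header value is numeric is not confirmed by the text above; if it cannot be parsed, the helper returns `None`.

```python
def remaining_credits(headers):
    """Return the x-remaining-credits value as a float.

    Returns None if the header is absent or not numeric (the numeric
    format is an assumption). The lookup is case-insensitive so it works
    with plain dicts as well as HTTP client header objects.
    """
    for name, value in headers.items():
        if name.lower() == "x-remaining-credits":
            try:
                return float(value)
            except (TypeError, ValueError):
                return None
    return None


def is_running_low(headers, threshold=10.0):
    """True if the remaining credits are known and below the threshold."""
    credits = remaining_credits(headers)
    return credits is not None and credits < threshold
```

With the request example on this page, this could be called as `remaining_credits(response.headers)` right after `requests.post(...)` returns.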

LTX Video

LTX-Video is a video generation model developed by Lightricks that uses a DiT (Diffusion Transformer) architecture to produce high-quality videos in real time. It generates video at 24 frames per second (FPS) and a resolution of 768x512, and is designed for efficiency, producing content faster than it takes to watch it. Trained on a large and diverse dataset, LTX-Video creates realistic and varied video content.

Key Features of LTX Video

- Real-Time Generation: Generates videos at 24 FPS, ensuring seamless playback.

- High Resolution: Produces videos at 768x512 resolution, suitable for various applications.

- Diverse Content Creation: Trained on a large-scale dataset to ensure a wide range of video styles and themes.

How to use LTX Video

To maximize the effectiveness of LTX-Video, crafting detailed prompts is essential. Consider the following structure:

- Start with the main action.

- Include specific movements and gestures.

- Describe character and object appearances precisely.

- Add background and environmental details.

- Specify camera angles and movements.

- Detail lighting and color schemes.

- Note any significant changes or events.

This structured approach will enhance the quality of generated videos by providing clear guidance to the model.
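
One way to apply the structure above is to assemble the prompt from named parts, in the same order as the list. This is only an illustrative sketch: `build_prompt` and its argument names are hypothetical and not part of LTX-Video or the Segmind API.

```python
def build_prompt(main_action, movements="", appearance="", background="",
                 camera="", lighting="", changes=""):
    """Join the prompt parts into one paragraph, in the order listed above.

    Empty parts are skipped, and a period is added to any part that does
    not already end with sentence punctuation.
    """
    parts = [main_action, movements, appearance, background,
             camera, lighting, changes]
    sentences = []
    for part in parts:
        text = part.strip()
        if not text:
            continue
        if not text.endswith((".", "!", "?")):
            text += "."
        sentences.append(text)
    return " ".join(sentences)


prompt = build_prompt(
    main_action="A woman looks down and to her right, then back up as she speaks",
    appearance="She has dark hair pulled back and light skin",
    camera="The camera angle is a close-up, focused on her face and upper torso",
    lighting="The lighting is dim and blue-toned",
)
print(prompt)
```

Keeping each element as a separate argument makes it easy to see which parts of the structure a prompt is still missing.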

Best settings for LTX Video

- Resolution Preset: Use resolutions divisible by 32; keep below 720x1280 for optimal performance.

- Guidance Scale: Recommended values between 3 and 3.5 for balanced output.

- Inference Steps: Use more than 40 steps for quality; fewer than 30 for speed.
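
The recommendations above can be turned into small helpers. The sketch below is an assumption-laden illustration: the function names are hypothetical, and the specific step counts (45 and 25) are merely example values that satisfy "more than 40" and "fewer than 30". The `cfg` and `steps` keys follow the request example on this page.

```python
def snap_to_32(value):
    """Round a dimension down to the nearest multiple of 32 (at least 32)."""
    return max(32, (value // 32) * 32)


def recommended_settings(priority="quality"):
    """Return example cfg/steps values in line with the settings above.

    The exact step counts are illustrative picks, not official defaults.
    """
    if priority == "quality":
        steps = 45  # more than 40 steps for quality
    elif priority == "speed":
        steps = 25  # fewer than 30 steps for speed
    else:
        raise ValueError("priority must be 'quality' or 'speed'")
    return {"cfg": 3, "steps": steps}  # guidance scale within 3 to 3.5


print(snap_to_32(650))
print(recommended_settings("speed"))
```

Rounding down rather than to the nearest multiple keeps a snapped dimension from exceeding the value the caller asked for.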

Other Popular Models

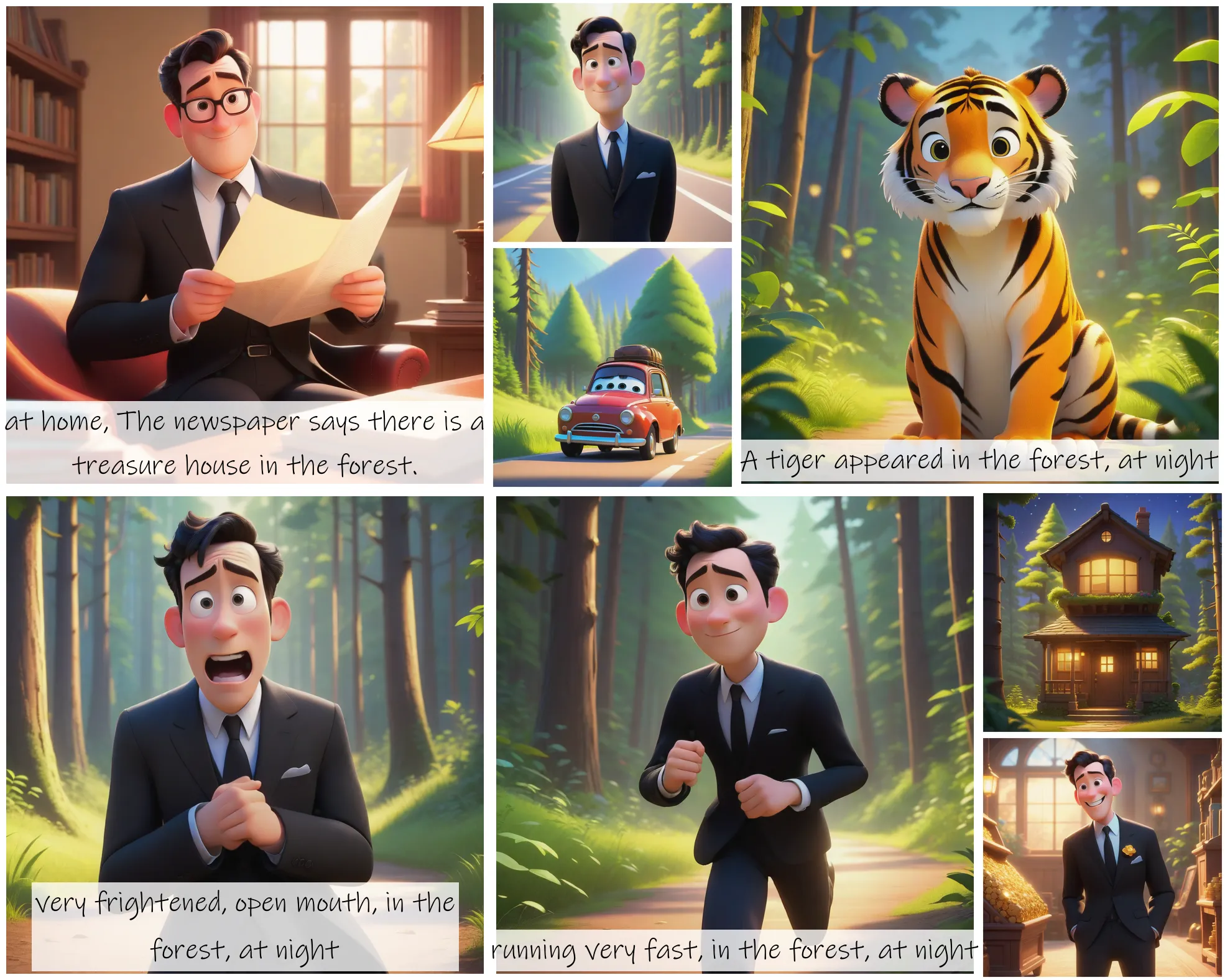

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

sdxl-inpaint

This model generates photo-realistic images from any text input and can also inpaint pictures using a mask.

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.

sd2.1-faceswapper

Take a picture or GIF and replace the face in it with a face of your choice. You only need one image of the desired face. No dataset or training is required.